Documentation/4.10/FAQ

For the latest Slicer documentation, visit the read-the-docs.

Contents

- 1 User FAQ: Installation & Generic

- 1.1 What is Slicer ?

- 1.2 Where can I download Slicer?

- 1.3 Where can I download older release of Slicer ?

- 1.4 Does Slicer work for non-English computing?

- 1.5 How to install Slicer ?

- 1.6 Is Slicer really free?

- 1.7 Where can I find someone to help me use Slicer?

- 1.8 Can I use slicer for patient care?

- 1.9 How to cite Slicer?

- 1.10 How do I create an account for the Slicer wiki?

- 1.11 Can I install Slicer without administrator rights?

- 1.12 What if I have problems with Slicer installation?

- 1.13 Slicer does not start

- 1.14 How to uninstall Slicer?

- 1.15 Where can I find Slicer tutorials?

- 1.16 I read errors in the logs complaining about memory

- 1.17 Which Slicer version should I use: 3.X or 4.X ?

- 1.18 What is my HOME folder ?

- 2 User FAQ: Slicer BarCamp

- 2.1 What is the 3DSlicer BarCamp ?

- 2.2 How to join the 3DSlicer BarCamp ?

- 2.3 How to know if a hangout is scheduled ?

- 2.4 Is a mobile phone required to attend the hangout ?

- 2.5 Is the hangout free of charge ?

- 2.6 Moderators: How to approve/reject requests ?

- 2.7 Moderator: How to schedule an hangout ?

- 3 User FAQ: Slicer Wiki

- 4 User FAQ: User Interface

- 5 User FAQ: Extensions

- 5.1 What is an extension ?

- 5.2 What is the extensions catalog ?

- 5.3 Why there are no windows 32-bit extensions available ?

- 5.4 Should I install the nightly version to access to last extension updates ?

- 5.5 How to update an already installed extension?

- 5.6 How to manually download an extension package?

- 5.7 How to manually install an extension package?

- 5.8 How to create a custom Slicer version with selected extensions pre-installed?

- 6 User FAQ: DICOM

- 6.1 What is DICOM anyway?

- 6.2 How do I know if the files I have are stored using DICOM format? How do I get started?

- 6.3 When I click on "Load selection to slicer" I get an error message "Could not load ... as a scalar volume"

- 6.4 I try to import a directory of DICOM files, but nothing shows up in the browser

- 6.5 Something is displayed, but it is not what I expected

- 7 User FAQ: Viewing and Resampling

- 8 User FAQ: Registration

- 8.1 Spatial Orientation, Header, Image Size

- 8.1.1 How do I fix incorrect axis directions? Can I flip an image (left/right, anterior/posterior etc) ?

- 8.1.2 How do I fix a wrong image orientation in the header? / My image appears upside down / facing the wrong way / I have incorrect/missing axis orientation

- 8.1.3 Can I undo the "centering" of an image

- 8.1.4 I have some DICOM images that I want to reslice at an arbitrary angle

- 8.1.5 How do I fix incorrect voxel size / aspect ratio of a loaded image volume?

- 8.1.6 The registration transform file saved by Slicer does not seem to match what is shown

- 8.1.7 I don't understand your coordinate system. What do the coordinate labels R,A,S and (negative numbers) mean?

- 8.1.8 My image is very large, how do I downsample to a smaller size?

- 8.2 Errors

- 8.2.1 Registration failed with an error. What should I try next?

- 8.2.2 Registration result is wrong or worse than before?

- 8.2.3 Registration results are inconsistent and don't work on some image pairs. Are there ways to make registration more robust?

- 8.2.4 How do I register images that are very far apart / do not overlap

- 8.2.5 How do I initialize/align images with very different orientations and no overlap?

- 8.2.6 Can I manually adjust or correct a registration?

- 8.3 Diffusion

- 8.4 Masking

- 8.4.1 What's the purpose of masking / VOI in registration? / What does the masking option in registration accomplish ?

- 8.4.2 How do I generate a mask and what should it look like ?

- 8.4.3 Which registration methods offer masking?

- 8.4.4 Is there a function to convert a box ROI into a volume labelmap?

- 8.4.5 I have to manually segment a large number of slices. How can I make the process faster?

- 8.4.6 How can I quickly generate a mask image ?

- 8.5 Parameters & Concept

- 8.5.1 What's the difference between Rigid and Affine registration?

- 8.5.2 What's the difference between Affine and BSpline registration?

- 8.5.3 What's the difference between the various registration methods listed in Slicer?

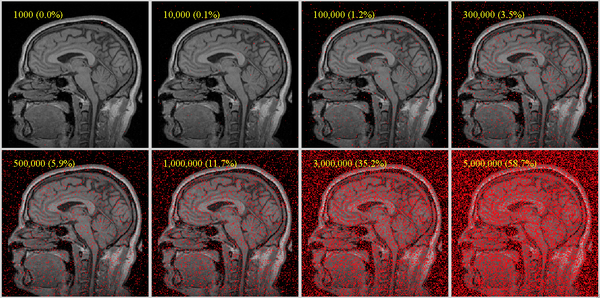

- 8.5.4 How many sample points should I choose for my registration?

- 8.5.5 Can I register 2D images with Slicer?

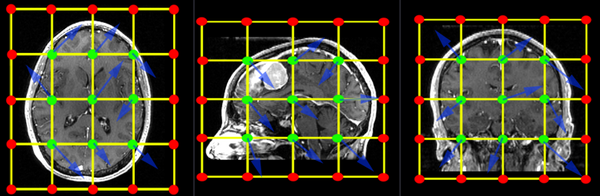

- 8.5.6 What's the BSpline Grid Size?

- 8.5.7 I want to register two images with different intensity/contrast.

- 8.5.8 How important is bias field correction / intensity inhomogeneity correction?

- 8.5.9 Have the Slicer registration methods been validated?

- 8.5.10 How can I save the parameter settings I have selected for later use or sharing?

- 8.5.11 Registration is too slow. How can I speed up my registration?

- 8.5.12 How do I register images via landmarks/fiducials?

- 8.5.13 Is the BRAINSfit registration for brain images only?

- 8.5.14 One of my images has a clipped field of view. Can I still use automated registration?

- 8.5.15 Can I combine multiple registrations?

- 8.5.16 Is there a module for surface registration?

- 8.5.17 Can I combine image and surface registration?

- 8.6 Apply, Resample, Export

- 8.6.1 I ran a registration but cannot see the result. How do I visualize the result transform?

- 8.6.2 My reoriented image returns to original position when saved; Problem with the Harden Transform function

- 8.6.3 What is the Meaning of 'Fixed Parameters' in the transform file (.tfm) of a BSpline registration ?

- 8.6.4 After registration the registered image appears cropped. How can I increase the field of view to see/include the entire image

- 8.6.5 How can I see the parameters of the function that describe a BSpline registration/deformation?

- 8.6.6 How to export the displacement magnitude of the transform as a volume?

- 8.6.7 Physical Space vs. Image Space: how do I align two registered images to the same image grid?

- 8.7 Nonrigid / BSpline Registration

- 8.7.1 The nonrigid (BSpline) registration transform does not seem to be nonrigid or does not show up correctly.

- 8.7.2 What's the difference between BRAINSfit and BRAINSDemonWarp?

- 8.7.3 How can I convert a BSpline transform into a deformation field?

- 8.7.4 Where can I check the reference color map for the transform visualizer? i.e. how can I know the magnitude of transform vectors?


- 9 User FAQ: Models

- 10 User FAQ: Scripting

User FAQ: Installation & Generic

What is Slicer ?

3D Slicer is:

- A software platform for the analysis (including registration and interactive segmentation) and visualization (including volume rendering) of medical images and for research in image guided therapy.

- A free, open source software available on multiple operating systems: Linux, MacOSX and Windows

- Extensible, with powerful plug-in capabilities for adding algorithms and applications.

Features include:

- Multi organ: from head to toe.

- Support for multi-modality imaging, including MRI, CT, US, nuclear medicine, and microscopy.

- Bidirectional interface for devices.

There is no restriction on use, but Slicer is not approved for clinical use and is intended for research. Permissions and compliance with applicable rules are the responsibility of the user. For details, see the Slicer license.

Where can I download Slicer?

3DSlicer is available for download by visiting the following link: http://download.slicer.org

You can also get older releases by using the offset parameter in the download page. For example, download page from 7 days ago: http://download.slicer.org/?offset=-7

To get a direct download link of previous revision on a selected operating system: http://download.slicer.org/download?os=macosx&stability=any&offset=-1

Always include these parameters:

- stability, which can be release, nightly, or any

- os, which can be win, macosx, or linux

Additional options:

- revision built on or before a given date: date=2015-01-01

- same, but using the checkout date: checkout-date=2015-02-01

- exact revision: revision=27000

- revision less than or equal to a given one: closest-revision=26000

- latest revision of 4.3 branch, can include patch as well: version=4.3

Any of these queries can be combined with the offset param, which will step forward or backward a given number of revisions. So, stability=release&version=4.5.0&offset=-1 should give you the revision just before the first 4.5 release.
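The query parameters described above can be assembled programmatically. The following is a minimal sketch, not an official client: the function name `build_download_url` and its validation are illustrative assumptions, and only the parameter names and allowed values listed above are taken from this page.

```python
from urllib.parse import urlencode

BASE_URL = "http://download.slicer.org/download"
VALID_OS = {"win", "macosx", "linux"}
VALID_STABILITY = {"release", "nightly", "any"}


def build_download_url(os_name, stability, **options):
    """Build a direct download URL (hypothetical helper).

    `options` holds the additional query parameters, e.g. offset=-1,
    version="4.5.0", revision=27000. Underscores in keyword names are
    turned into hyphens, so closest_revision becomes closest-revision.
    """
    if os_name not in VALID_OS:
        raise ValueError(f"os must be one of {sorted(VALID_OS)}")
    if stability not in VALID_STABILITY:
        raise ValueError(f"stability must be one of {sorted(VALID_STABILITY)}")
    # The two mandatory parameters come first, then the optional ones.
    query = {"os": os_name, "stability": stability}
    for key, value in options.items():
        query[key.replace("_", "-")] = value
    return f"{BASE_URL}?{urlencode(query)}"


# Reproduces the example link given earlier in this answer.
print(build_download_url("macosx", "any", offset=-1))
```

Validating `os` and `stability` up front reflects the rule that both must always be present. The other parameters are passed through unchanged.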

Where can I download older release of Slicer ?

Older releases of 3DSlicer are available here: http://slicer.kitware.com/midas3/folder/274

Does Slicer work for non-English computing?

At this point no, Slicer uses US English conventions. Specifically this means that directory paths should use ASCII characters only.

There has been some discussion and planning about internationalization but it is not yet available:

- https://www.slicer.org/wiki/Documentation/Labs/I18N

- https://discourse.slicer.org/t/slicer-internationalization/579

How to install Slicer ?

- Go to http://download.slicer.org

- Click on the link corresponding to your operating system

Replace the version numbers in the commands and file names below as appropriate.

Linux: start a terminal and type these commands:

$ tar xzvf ~/Downloads/Slicer-4.6.2-linux-amd64.tar.gz -C ~/

$ cd ~/Slicer-4.6.2-linux-amd64

$ ./Slicer

Windows:

- Double click on the downloaded Slicer-4.6.2-win-amd64.exe package.

- Follow instructions displayed on the screen.

Mac OSX:

- Double click on the downloaded Slicer-4.6.2-macosx-amd64.dmg package.

- Drag & drop the Slicer.app onto your Desktop or into your Applications.

Note: on some Linux distributions, additional packages may be required. For example, for Slicer 4.9 on Debian Linux 9, if you get errors about missing libraries, you may need to run this command:

sudo apt-get install libpulse-dev libnss3 libasound2

Is Slicer really free?

Yes, really, truly, free. Not just a free trial. No pro version with all the good stuff. Slicer is free with no strings attached. You can even re-use the code in any way you want with no royalties and you don't even need to ask us for permission. (Of course we're always happy to hear from people who've found slicer interesting).

See the Slicer License page for the legal version of this.

Where can I find someone to help me use Slicer?

We rely on the community of users and developers to share their expertise. Slicer Community support and development discussions are hosted on Discourse, which provides a modern web forum as well as email-only interaction:

https://discourse.slicer.org

If you post to any public forum, be sure not to include any Protected Health Information (PHI) or any other data that would get you or anyone else in trouble. However, posting example data can be very important to people who are interested in helping you solve your problems. If you can replicate your question using data from the Sample Data or Data Store modules that's the first choice.

The Users Manual gives descriptions for using each module, and you can check out the Training pages for in depth tutorials about workflows.

Mailing list discussions from before April 2017 are archived.

Can I use slicer for patient care?

Slicer is intended for research work and has no FDA clearances or approvals of any kind. It is the responsibility of the user to comply with all laws and regulations (and moral/ethical guidelines) when using slicer.

How to cite Slicer?

3D Slicer as a Platform

To acknowledge 3D Slicer as a platform, please cite the Slicer web site and the following publications when publishing work that uses or incorporates 3D Slicer:

- An overview of Slicer and its history

- Kikinis R, Pieper SD, Vosburgh K (2014) 3D Slicer: a platform for subject-specific image analysis, visualization, and clinical support. Intraoperative Imaging Image-Guided Therapy, Ferenc A. Jolesz, Editor 3(19):277–289 ISBN: 978-1-4614-7656-6 (Print) 978-1-4614-7657-3 (Online)

- About Project Week

- Kapur, Tina; Pieper, Steve; Fedorov, Andriy; Fillion-Robin, J-C; Halle, Michael; O'Donnell, Lauren; Lasso, Andras; Ungi, Tamas; Pinter, Csaba; Finet, Julien; Pujol, Sonia; Jagadeesan, Jayender; Tokuda, Junichi; Norton, Isaiah; Estepar, Raul San Jose; Gering, David; Aerts, Hugo J W L; Jakab, Marianna; Hata, Nobuhiko; Ibanez, Luiz; Blezek, Daniel; Miller, Jim; Aylward, Stephen; Grimson, W Eric L; Fichtinger, Gabor; Wells, William M; Lorensen, William E; Schroeder, Will; Kikinis, Ron; 2016. “Increasing the Impact of Medical Image Computing Using Community-Based Open-Access Hackathons: The NA-MIC and 3D Slicer Experience.” Medical Image Analysis 33 (October): 176–80.

- Slicer 4

- Fedorov A., Beichel R., Kalpathy-Cramer J., Finet J., Fillion-Robin J-C., Pujol S., Bauer C., Jennings D., Fennessy F.M., Sonka M., Buatti J., Aylward S.R., Miller J.V., Pieper S., Kikinis R. 3D Slicer as an Image Computing Platform for the Quantitative Imaging Network. Magn Reson Imaging. 2012 Nov;30(9):1323-41. PMID: 22770690. PMCID: PMC3466397.

- Slicer 3

- Pieper S, Lorensen B, Schroeder W, Kikinis R. The NA-MIC Kit: ITK, VTK, Pipelines, Grids and 3D Slicer as an Open Platform for the Medical Image Computing Community. Proceedings of the 3rd IEEE International Symposium on Biomedical Imaging: From Nano to Macro 2006; 1:698-701.

- Pieper S, Halle M, Kikinis R. 3D SLICER. Proceedings of the 1st IEEE International Symposium on Biomedical Imaging: From Nano to Macro 2004; 1:632-635.

- Slicer 2

- Gering D.T., Nabavi A., Kikinis R., Hata N., O'Donnell L., Grimson W.E.L., Jolesz F.A., Black P.M., Wells III W.M. An Integrated Visualization System for Surgical Planning and Guidance using Image Fusion and an Open MR. J Magn Reson Imaging. 2001 Jun;13(6):967-75. PMID: 11382961.

- Gering D.T., Nabavi A., Kikinis R., Grimson W.E.L., Hata N., Everett P., Jolesz F.A., Wells III W.M. An Integrated Visualization System for Surgical Planning and Guidance using Image Fusion and Interventional Imaging. Int Conf Med Image Comput Comput Assist Interv. 1999 Sep;2:809-19.

Individual Modules

To acknowledge individual modules

How do I create an account for the Slicer wiki?

Please note: You only need an account if you want to edit or add pages.

Follow the Log in->Request Account link from the upper right corner of the slicer wiki page. Once the account request is approved, you will be e-mailed a notification message and the account will be usable at log in.

Can I install Slicer without administrator rights?

The most convenient way to install Slicer is to run the installer package (Slicer-4.10....exe) as administrator. However, installation is not necessary: you can unpack the files in the installer package, copy them to your user directory or a USB drive, and start Slicer by running Slicer.exe.

You can unpack the installation package by one of the following methods:

- Install Slicer on any computer where you have administrator access. All the files that you need to run Slicer are in the C:\Program Files\Slicer... directory.

- Unpack the installation package by using 7zip or using the InstallExplorer plugin in Total commander or FAR manager (http://nsis.sourceforge.net/Can_I_decompile_an_existing_installer). All the Slicer files will be in the $_OUTDIR directory, except Slicer.exe, so you have to copy Slicer.exe into $_OUTDIR and run it from there. You can rename the $_OUTDIR directory and discard all the other directories ($COMMONFILES, $PLUGINSDIR, etc).

What if I have problems with Slicer installation?

You can read our guide explaining how to report a problem.

Slicer does not start

"Insufficient graphics capability" popup is displayed

In Slicer-4.9, the minimum graphics requirements have been increased compared to earlier versions. Computers running Slicer must support OpenGL 3.2. This OpenGL version was released in 2009, so all desktop and laptop computers should already support it. However, on virtual machines or when connecting to a computer via remote desktop, these minimum requirements may not be met.

- If you are trying to connect through Windows remote desktop (RDP):

- Recent Intel integrated GPUs and some NVidia GPUs (Quadro series) running on Windows 10 now support running current OpenGL versions. You may consider upgrading your hardware.

- If your computer can normally run Slicer but when started through remote desktop it displays "Insufficient graphics capability" popup: You need to start Slicer in normal desktop mode and then establish remote connection. You can achieve this by following these steps:

- Connect to the remote computer using remote desktop

- Start Slicer on the remote computer

- Click "Retry" when "Insufficient graphics capability" popup appears

- Grant administrator access in the displayed user account control popup (administrator access is needed for termination of the current remote desktop session), this will close the remote desktop connection

- Reconnect to the remote computer using remote desktop - you should see Slicer application started up successfully

Any other issues

See Documentation/4.10/Developers/Tutorials/Troubleshooting#Debugging_Slicer_application_startup_issues

How to uninstall Slicer?

- On Windows, choose "Uninstall" option from the Start menu.

- On the Mac, remove the Slicer.app file. To clean up settings, remove "~/.config/www.na-mic.org/"

- On Linux, remove the directory where the application is located. To clean up settings, remove "~/.config/NA-MIC/"

See the information about the location of Settings for all platforms. If the uninstaller is not working on Windows, you may need to remove the settings manually.

Where can I find Slicer tutorials?

Slicer tutorials associated with the latest 4.10 stable release are available by visiting the following link: Click Here.

I read errors in the logs complaining about memory

Errors such as “Description: Failed to allocate memory for image.” indicate that you don’t have enough memory space. This can be a common issue if you run a 32-bit version of Slicer. You cannot expect a 32-bit executable to deal with any moderately complex problem. The recommended solution is to download/build/use Slicer in 64-bit mode.

Possible workarounds:

- Use a 64-bit version of Slicer

- You have somewhat more memory if you run the module in a separate process. To do that open Edit / Application Settings / Modules and check the “Prefer Executable CLIs” option, then restart Slicer.

- Decrease the size and/or resolution of the input and output images

- Consider Crop Volume to focus on just your area of interest.

- Consider ResampleScalarVectorDWIVolume or ResampleScalarVolume to increase the sample spacing (decrease the resolution) of your data.
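To get a feeling for why cropping or resampling helps, the raw size of an image volume can be estimated from its dimensions. This is a rough sketch with made-up example dimensions. It counts only the voxel buffer itself, so actual memory use during processing is typically higher, since modules may create copies or convert to larger data types.

```python
def volume_bytes(dims, bytes_per_voxel):
    """Raw size in bytes of a 3D volume with the given voxel dimensions."""
    nx, ny, nz = dims
    return nx * ny * nz * bytes_per_voxel


def downsampled_dims(dims, factor):
    """Dimensions after increasing the spacing by `factor` along every axis."""
    return tuple(max(1, d // factor) for d in dims)


MIB = 2 ** 20

# Hypothetical CT-like volume: 512 x 512 x 300 voxels, 2 bytes per voxel.
original = (512, 512, 300)
full_size = volume_bytes(original, 2)
half_res_size = volume_bytes(downsampled_dims(original, 2), 2)

print(f"original:        {full_size / MIB:.2f} MiB")      # 150.00 MiB
print(f"half resolution: {half_res_size / MIB:.2f} MiB")  # 18.75 MiB
```

Doubling the spacing along all three axes reduces the voxel count, and therefore the raw size, by a factor of eight.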

Which Slicer version should I use: 3.X or 4.X ?

In general slicer3 and slicer4 have roughly similar functionality with respect to registration basics. Probably the most important thing to keep in mind is that slicer3 is no longer actively maintained.

Slicer4, on the other hand, has benefited from literally hundreds of bug fixes over the past several years, and typically has better features and much better performance. Also, the nightly builds of slicer4 are now using ITKv4, which has significantly improved registration code. I am told by active users/developers of ITK that ITKv4 should provide significantly better results in many cases. Also, several new registration techniques are being actively developed for slicer4.

Source: http://massmail.spl.harvard.edu/public-archives/slicer-users/2013/006190.html

What is my HOME folder ?

| Linux or MacOSX | Windows |
|---|---|
| Start a terminal and run: $ echo ~ (example output: /home/jchris) | Start Command Prompt (Start Menu -> All Programs -> Accessories -> Command Prompt) and run: > echo %userprofile% (example output: C:\Users\jcfr) |
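The same information can be obtained from Python on any platform, for example from a Python console. This small sketch uses only the Python standard library and is independent of Slicer.

```python
from pathlib import Path

# Path.home() resolves the current user's home folder on Linux, macOS and Windows.
home = Path.home()
print(home)
```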

User FAQ: Slicer BarCamp

What is the 3DSlicer BarCamp ?

The 3DSlicer BarCamp is a Google Plus community for sharing facts, ideas and news related to the medical imaging field and the open-source software 3DSlicer.

It can be accessed using the following URL: https://plus.google.com/communities/105715968666294296532

How to join the 3DSlicer BarCamp ?

Join the 3DSlicer BarCamp G+ community by clicking on Join Community

How to know if a hangout is scheduled ?

You will be notified of the event when it is scheduled and when it starts.

Is a mobile phone required to attend the hangout ?

You can join using a regular computer with webcam and microphone.

Is the hangout free of charge ?

Joining the 3DSlicer community will not cost you anything. It lets you know when our weekly hangout is scheduled and lets you post relevant information. The hangout is an opportunity to have some face time with other developers and users.

By checking if people are interested in joining, we simply avoid spam and unwanted traffic.

Moderators: How to approve/reject requests ?

Starting August 24th, anyone can join the 3DSlicer BarCamp community without pre-approval. The ITK community has applied the same model for quite some time without any issue, so let the experiment begin.

If you are a community moderator, you could send the following message to confirm the interest of folks that would like to join:

Make sure to update <FUTURE_MEMBER_NAME> and <YOUR_NAME>.

Dear <FUTURE_MEMBER_NAME>,

You recently requested to join the 3DSlicer BarCamp community on Google Plus. Before approving your request, I am reaching out to you to make sure this community aligns with your interests.

The main idea behind this community is to share facts, ideas and news related to the medical imaging field and the open-source software 3DSlicer [1][2].

Let me know if you are still interested and I will gladly approve your request.

Best,

<YOUR_NAME> on behalf of the 3D Slicer community

[1] http://en.wikipedia.org/wiki/3DSlicer

[2] www.slicer.org

If, a few days or weeks later, you haven't heard back, you could send the following message:

Dear <FUTURE_MEMBER_NAME>,

Not having heard back from you, I will cancel your request. If you think this is a mistake, do not hesitate to contact us on the Slicer user or developer mailing list. See http://www.slicer.org

Best,

<YOUR_NAME> on behalf of the 3D Slicer community

Moderator: How to schedule an hangout ?

See Developer_Meetings/OrganizerInstruction

User FAQ: Slicer Wiki

How to create a wiki account ?

How to list all wiki users ?

See Users List

Moderator: How to review and approve a wiki account request ?

Prerequisites: To be notified by email each time a user account request is created, you should belong to the Administrators group.

Review the email and update the account request:

- If this is clearly spam, mark as Spam.

- If in doubt, mark as Hold and forward the email to another administrator asking for clarification.

- If you know the person, mark as Accept to approve the request.

Notes:

- If an account is legitimate (thus approved), but there is some content that is explicitly or generally frowned upon (e.g. promotional copy) in the bio, then the reviewer/approver should make an edit to the user page with an explanation. The bio is plain text in the form.

- Was your Biography (submitted in your original account request) not included in your User page? You can see/retrieve bios at Special:UserCredentials

- We require a 50 word Biography in the account sign-up process to help us determine the authenticity and identity of the request.

User FAQ: User Interface

How to overlay 2 volumes ?

- Load the two volumes

- Use the slice viewer controls to select one of the volumes as the foreground and one as the background.

- Change the opacity of the Foreground to your liking.

- If you click on the link symbol, these changes are applied to all slice viewers.
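The effect of the Foreground opacity setting can be illustrated with a simple blending formula. This is only a conceptual sketch that assumes plain linear blending of two display intensities; it is not taken from Slicer's source code, and the actual compositing in the slice viewers may differ in detail.

```python
def blend(background, foreground, opacity):
    """Linearly blend two display intensities.

    opacity = 0.0 shows only the background volume,
    opacity = 1.0 shows only the foreground volume.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0 and 1")
    return (1.0 - opacity) * background + opacity * foreground


# A background pixel of 100 and a foreground pixel of 200 at 25% opacity.
print(blend(100, 200, 0.25))  # 125.0
```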

How to load data from a sequence of jpg, tif, or png files?

- Choose from the menu: File / Add Data

- Click Choose File(s) to Add button and select any of the files in the sequence in the displayed dialog. Important: do not choose multiple files or the entire parent folder, just a single file of the sequence.

- Click on Show Options and uncheck the Single File option

- Click OK to load the volume

- Go to the Volumes module

- Choose the loaded image as Active Volume

- In the Volume Information section set the correct Image Spacing and Image Origin values

- Most modules require grayscale image as input. The loaded color image can be converted to a grayscale image by using the Vector to scalar volume module

Note: Consumer file formats, such as jpg, png, and tiff are not well suited for 3D medical image storage due to the following serious limitations:

- Storage is often limited to bit depth of 8 bits per channel: this causes significant data loss, especially for CT images.

- No standard way of storing essential metadata: slice spacing, image position, orientation, etc. must be guessed by the user and provided to the software that imports the images. If the information is not entered correctly then the images may appear distorted and measurements on the images may provide incorrect results.

- No standard way of indicating slice order: data may easily get corrupted due to incorrectly ordered or missing frames.
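The data loss caused by 8-bit storage can be made concrete with a small calculation. The sketch below assumes a CT intensity range of -1024 to 3071 Hounsfield units (4096 distinct values, a common 12-bit range) and a simple linear rescaling. Both are illustrative assumptions, and real export tools may use a different window.

```python
def to_8bit(hu, hu_min=-1024, hu_max=3071):
    """Linearly rescale a CT value (assumed range hu_min..hu_max) to 0..255."""
    clamped = min(max(hu, hu_min), hu_max)
    return (clamped - hu_min) * 255 // (hu_max - hu_min)


# 4096 possible input values are squeezed into 256 output values,
# so roughly 16 neighbouring CT values share one gray level.
print((3071 - (-1024) + 1) // 256)  # 16

# Two different CT values that become indistinguishable after conversion.
print(to_8bit(40), to_8bit(50))  # 66 66
```

Once the values have been merged into the same gray level, the original difference cannot be recovered from the 8-bit file.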

User FAQ: Extensions

What is an extension ?

An extension could be seen as a delivery package bundling together one or more Slicer modules. After installing an extension, the associated modules will be presented to the user as built-in ones.

The Slicer community maintains a website referred to as the Slicer Extensions Catalog to support finding, downloading and installing of extensions. Access to this website is integral to Slicer and facilitated by the Extensions Manager functionality built into the distributed program.

The Catalog classifies extensions into three levels of compliance:

- Category 1: Fully compliant Slicer Extensions: Slicer license, open source, maintained.

- Category 2: Open source, contact exists.

- Category 3: All other extensions (work in progress, beta, closed source etc).

To publish extensions, developers should consider reading the following pages:

What is the extensions catalog ?

The extensions catalog provides Slicer users with a convenient way to access the extensions previously uploaded on the extensions server:

- from within Slicer with the help of the extensions manager

- from the web: http://slicer.kitware.com/midas3/slicerappstore

Why there are no windows 32-bit extensions available ?

- Win 32 has a very limited amount of memory available to an application.

- Many registration and segmentation algorithms fail on that platform because they run out of memory when used with large data sets.

- Some of these failures are just that, some can crash Slicer. Even though the "real" failure is caused by overextending the capabilities of the hardware (in a way the user's fault), it appears to the user that Slicer does not work.

- If you search the archives of Slicer Users, there are several such complaints from before we started to discourage people from using 32-bit builds.

Discussion: http://massmail.spl.harvard.edu/public-archives/slicer-users/2013/006703.html

Should I install the nightly version to access to last extension updates ?

If the extension developers contributed updates for the current stable release, you don't have to install the nightly version of Slicer. You can simply update the extension. Consider reading How to update an already installed extension.

On the other hand, if the extension developers stopped maintaining the version of their extension built against the stable release (so that they can use the latest features that will be in the next Slicer release), downloading the nightly is the only way to get the latest version of the extension.

How to update an already installed extension?

Assuming updated extensions are available for your version of Slicer, extensions can be updated either manually or automatically.

See Updating installed extensions

How to manually download an extension package?

1) Get the revision associated with your installed or built Slicer (Menu -> Help -> About). The revision is the number preceded by the character r. For example, if the complete version string is 4.3.1-2014-09-14 r23677, the revision is 23677.

2) Open the extension catalog (app store). The default Slicer extension catalog is available at: http://slicer.kitware.com/midas3/slicerappstore

3) Select operating system, bitness and enter revision in the empty textbox between the bitness selector and the searchbox. If no revision is entered then the No extensions found message will be displayed.

4) Click Download button of the selected extension to download the extension package.
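Extracting the revision in step 1 can be automated when the version string is available as text. This is a small sketch; the function name `extract_revision` is an illustrative assumption, and the pattern only relies on the "r followed by digits" convention described above.

```python
import re


def extract_revision(version_string):
    """Return the revision number from a string like '4.3.1-2014-09-14 r23677'."""
    match = re.search(r"\br(\d+)\b", version_string)
    if match is None:
        raise ValueError(f"no revision found in {version_string!r}")
    return int(match.group(1))


print(extract_revision("4.3.1-2014-09-14 r23677"))  # 23677
```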

How to manually install an extension package?

Option 1. Use extension manager as described above

Option 2. Use Slicer extension called "DeveloperToolsForExtensions"

Option 3. Fully manual installation:

- Extract the archive (zip or tar.gz) in a folder. You should then have a folder like:

/path/to/<slicer_revision>-<os>-<bitness>-<extension_name>-<extension_scm><extension_revision>-YYYY-MM-DD

containing one or more of the following folders (for more information on folder structure, click here):

lib/Slicer-X.Y/cli-modules

lib/Slicer-X.Y/qt-loadable-modules

lib/Slicer-X.Y/qt-scripted-modules

- In the Module settings (Menu -> Edit -> Settings), add all existing paths ending with:

lib/Slicer-X.Y/cli-modules

lib/Slicer-X.Y/qt-loadable-modules

lib/Slicer-X.Y/qt-scripted-modules

Note: additional module paths can be added temporarily by starting Slicer with the option --additional-module-paths.
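For Option 3, the module folders that need to be added can be listed with a short script instead of browsing the extracted archive by hand. This is a sketch under the assumption that the extracted folder follows the layout described above. The function name `find_module_paths` and the example folder are hypothetical.

```python
from pathlib import Path

MODULE_DIR_NAMES = ("cli-modules", "qt-loadable-modules", "qt-scripted-modules")


def find_module_paths(extension_dir):
    """List existing lib/Slicer-X.Y/<module type> folders of an extracted extension."""
    root = Path(extension_dir)
    found = []
    # X.Y differs between Slicer versions, so match it with a wildcard.
    for slicer_dir in sorted(root.glob("lib/Slicer-*")):
        for name in MODULE_DIR_NAMES:
            candidate = slicer_dir / name
            if candidate.is_dir():
                found.append(candidate)
    return found


if __name__ == "__main__":
    # Hypothetical location of an extracted extension package.
    for path in find_module_paths("/path/to/extracted-extension"):
        print(path)
```

Each printed path can then be added in the Module settings, or passed temporarily using the --additional-module-paths option mentioned in the note above.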

How to create a custom Slicer version with selected extensions pre-installed?

1) Download and install Slicer

2) Install all necessary extensions manually (as described above) in <slicer_install_dir>/lib/Slicer-X.Y/...

3) If all the files in <slicer_install_dir> are copied to any other folder/computer/USB drive/portable storage device then Slicer can be launched by running the Slicer executable in the main directory. No installation or administrative access rights are necessary. Slicer can even be launched directly from a USB drive, without copying files to the computer.

User FAQ: DICOM

What is DICOM anyway?

Digital Imaging and Communications in Medicine (DICOM) is the standard for the communication and management of medical imaging information and related data (see the Wikipedia article). Among other things, DICOM defines the format of objects for communicating images and image-related data. In most cases, imaging equipment (CT and MR scanners) used in hospitals will generate images saved as DICOM objects.

DICOM organizes data following the hierarchy of

- Patient ... can have 1 or more

- Study (single imaging exam encounter) ... can have 1 or more

- Series (single image acquisition, most often corresponding to a single image volume) ... can have 1 or more

- Instance (most often, each Series will contain multiple Instances, with each Instance corresponding to a single slice of the image)


As a result of an imaging exam, the imaging equipment generates DICOM files, where each file corresponds to one Instance and is tagged with the information that identifies its Series, Study and Patient, so that it can be placed into the proper location in the hierarchy.
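The Patient / Study / Series / Instance hierarchy can be pictured as a nested grouping of files. The sketch below does not read real DICOM files. It uses hypothetical, hand-written records with simplified keys, whereas real files carry this information in attributes such as Patient ID, Study Instance UID and Series Instance UID.

```python
from collections import defaultdict


def build_hierarchy(instances):
    """Group instance records into patient -> study -> series -> [files]."""
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for inst in instances:
        tree[inst["patient"]][inst["study"]][inst["series"]].append(inst["file"])
    return tree


# Hypothetical records: one patient, one study, two series.
instances = [
    {"patient": "P1", "study": "S1", "series": "CT-axial", "file": "0001.dcm"},
    {"patient": "P1", "study": "S1", "series": "CT-axial", "file": "0002.dcm"},
    {"patient": "P1", "study": "S1", "series": "MR-T1", "file": "0003.dcm"},
]

tree = build_hierarchy(instances)
for patient, studies in tree.items():
    for study, series_map in studies.items():
        for series, files in series_map.items():
            print(patient, study, series, len(files), "instance(s)")
```

Each file is assigned to its place purely from the identifiers it carries, which is why the files on disk do not need any particular naming or folder layout.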

There is a variety of DICOM objects defined by the standard. The most common object types are those that store the image volumes produced by CT and MR scanners. Those objects most often have multiple files (instances) for each series. Image processing tasks are usually concerned with analyzing the image volume, which most often corresponds to a single Series. The first step in working with such data is to load that volume into 3D Slicer.

How do I know if the files I have are stored using DICOM format? How do I get started?

DICOM files do not need to have a specific file extension, and it may not be straightforward to answer this question easily. However, if you have a dataset produced by a clinical scanner, it is most likely in the DICOM format. If you suspect your data might be in DICOM format, it might be easiest to try to load it as DICOM:

1) Drag and drop the directory with your data into the Slicer window. You will get a prompt "Select a reader to use for your data? Load directory into DICOM database." Accept that selection. You will see a progress update as the content of that directory is being indexed. If the directory contained DICOM data and the import succeeded, a message at completion reports how many Patient/Study/Series/Instance items were successfully imported.

2) Once import is completed, you will see the window of the DICOM Browser listing all Patients/Studies/Series currently indexed. You can next select individual items from the DICOM Browser window and load them.

3) Once you load the data into Slicer using DICOM Browser, you can switch to the "Data" module to examine the content that was imported.
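If you would like a quick check outside of Slicer before importing, the sketch below tests for the marker that DICOM files stored in the standard file format carry: a 128-byte preamble followed by the four characters `DICM`. The helper name `looks_like_dicom` is made up for this example. Some older or non-standard files omit the preamble, so a negative result does not prove that the data is not DICOM.

```python
import os
import tempfile


def looks_like_dicom(path):
    """Return True if the file has the 'DICM' marker after a 128-byte preamble."""
    with open(path, "rb") as f:
        f.seek(128)
        return f.read(4) == b"DICM"


# Demonstration with two small temporary files (not real images).
with tempfile.TemporaryDirectory() as tmp:
    dicom_like = os.path.join(tmp, "a")
    other = os.path.join(tmp, "b.txt")
    with open(dicom_like, "wb") as f:
        f.write(b"\x00" * 128 + b"DICM")
    with open(other, "wb") as f:
        f.write(b"just some text")
    dicom_result = looks_like_dicom(dicom_like)
    other_result = looks_like_dicom(other)

print(dicom_result, other_result)
```

Importing the directory into Slicer as described above remains the more reliable test, because the DICOM browser also reports what was found.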

When I click on "Load selection to slicer" I get an error message "Could not load ... as a scalar volume"

A common cause of loading failure is corruption of the DICOM files by incorrect anonymization. Patient name, patient ID, and series instance UID fields should not be empty or missing (the anonymizer should replace them by other valid strings). Try to load the original, non-anonymized sequence and/or change your anonymization procedure.

If none of the above helps then check the Slicer error logs and report the error on the Slicer forum. If you share the data (e.g., upload it to Dropbox and add the link to the error report) then Slicer developers can reproduce and fix the problem faster.

I try to import a directory of DICOM files, but nothing shows up in the browser

DICOM is a complex way to represent data, and often scanners and other software will generate 'non-standard' files that claim to be DICOM but really aren't compliant with the specification. In addition, the specification itself has many variations and special formats that Slicer is not able to understand. Slicer is used most often with CT and MR DICOM objects, so these will typically work.

If you have trouble importing DICOM data here are some steps to try:

- Make sure you are following the DICOM module documentation.

- Try using the latest nightly build of Slicer.

- To confirm that your installation of Slicer is reading data correctly, try loading other data, such as this anonymized sample DICOM series (CT scan).

- Try import using different DICOM readers: in Application settings / DICOM / DICOMScalarVolumePlugin / DICOM reader approach: switch from DCMTK to GDCM (or GDCM to DCMTK), restart Slicer, and attempt to load the data set again.

- Try the DICOM Patcher module

- Review the Error Log for information.

- Try loading the data by selecting one of the files in the Add Data Dialog. Note: be sure to turn on Show Options and then turn off the Single File option in order to load the selected series as a volume

- If you are still unable to load the data, you may need to find a utility that converts the data into something Slicer can read. Sometimes tools like FreeSurfer, FSL or MRIcron can understand special formats that Slicer does not handle natively. These systems typically export NIfTI files that Slicer can read.

- If you are sure that the DICOM files do not contain patient confidential information, you may post a sample dataset on a web site and ask for help from Slicer forum. Please be careful not to accidentally reveal private health information. If you want to remove identifiers from the DICOM files you may want to look at DicomCleaner or the RSNA Clinical Trial Processor software.

- Try moving the data and the database directory to a path that includes only US English characters (ASCII) to avoid possible parsing errors.

Something is displayed, but it is not what I expected

When you load a study from DICOM, it may contain several data sets and by default Slicer may not show the data set that you are most interested in. Go to Data module / Subject hierarchy section and click the "eye" icons to show/hide loaded data sets. You may need to click on the small rectangle icon ("Adjust the slice viewer's field of view...") on the left side of the slice selection slider after you show a volume.

User FAQ: Viewing and Resampling

What coordinate systems does Slicer use?

See this documentation of Slicer coordinate systems.

Is there a global coordinate system ?

There is no coordinate system in Slicer that is called Global. There is one called RAS. When you open Slicer, the middle of the pink wireframe box in the 3D view is at the origin of RAS. When a medical image is loaded in Slicer, it is oriented relative to the RAS coordinate system so that the right hand of the patient is towards R (first coordinate), the front of the patient is towards A (second coordinate), and the head of the patient is towards S (third coordinate). It is a right-handed system.

To visualize "something", it is usually most convenient to transform it to RAS, so it shows up relative to CT or MR images loaded in Slicer. When hovering the mouse over an image slice (2D), the RAS coordinates are displayed in the Data Probe located in the lower left corner of the Slicer UI.

Source: http://slicer-devel.65872.n3.nabble.com/Reference-System-tp4031501p4031503.html

How can I use the reformat widget view to resample my images?

You can do something like this in the Python console:

    # Get the Red slice node and copy its slice-to-RAS matrix
    red = getNodes('vtkMRMLSliceNode*')['Red']
    m = vtk.vtkMatrix4x4()
    m.DeepCopy(red.GetSliceToRAS())
    m.Invert()
    # Create a linear transform from the inverted matrix and add it to the scene
    t = slicer.vtkMRMLLinearTransformNode()
    t.SetAndObserveMatrixTransformToParent(m)
    slicer.mrmlScene.AddNode(t)

Then put your volume under "t" or provide "t" as the transform to one of the Resample modules.

User FAQ: Registration

Spatial Orientation, Header, Image Size

How do I fix incorrect axis directions? Can I flip an image (left/right, anterior/posterior etc) ?

Sometimes the header information that describes the orientation and size of the image in physical space is incorrect or missing. Slicer displays images in physical space, in a RAS orientation. If images appear flipped or upside down, the transform that describes how the image grid relates to the physical world is incorrect. In proper RAS orientation, a head should have anterior end at the top in the axial view, look to the left in a sagittal view, and have the superior end at the top in sagittal and coronal views.

Yes, you can flip images and change the axis orientation of images in Slicer. But we urge great caution when doing so, since this can introduce substantial problems if done wrong. Wrong information is worse than no information. Below are the steps to flip the LR axis of an image:

You may skip steps 5-8 below and download predefined transforms here. To apply them, unzip the download, drag & drop it into Slicer, and drag your volume inside the transform.

- Go to the Data module, right click on the node labeled "Scene" and select "Insert Transform" from the pulldown menu

- You should see a new transform node being added to the tree, named "LinearTransform_1" or similar.

- left click on the volume you wish to flip, and drag it onto the new transform node. You should see a "+" appear in front of the transform node, and clicking on it should reveal the volume now inside/under that transform.

- make sure you have the image you wish to flip selected and visible in the slice views, preferably all 3 views (sagittal, coronal, axial).

- Switch to the Transforms module and (if not selected already) select the newly created transform from the Active Transform menu.

- Under Transform Matrix you see a 4x4 array of ones and zeros. Each row represents an axis direction. We will switch the axis direction by changing the sign of one of the 1s.

- e.g. to flip left/right: double-click inside the top left field where you see a 1. The number should be highlighted and change to 1.0

- replace the 1.0 with "-1.0", then hit the RETURN key. You should see a flip immediately, assuming you have the volume in the proper view. Depending on which of the axes you want flipped, select the 1 in one of the other rows.

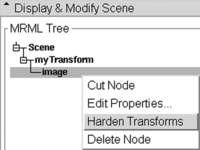

- When you have the result you want, return to the Data module, there right click on your image and select Harden Transform from the pulldown menu.

- The image will move back outside the transform onto the main level, indicating that your change of axis orientation has now been applied. Note there is no Undo for this step. If you change your mind you have to apply the same flip again or reload your volume.

- Note that the flip will likely also cause a shift, depending on your image origin. You may choose to recenter your image. To do so go to the Volumes module, open the Volume Information tab and click on the Center Volume button

- Note that this change was applied to the header information that stores the physical orientation, not the image data itself. Hence you will only see this flip in software that reads and accounts for this header orientation info.

- Save your image under a new name, and do not use a format that doesn't store physical orientation info in the header (jpg, gif etc.). Also consider saving the transform as documentation of the change you have applied. You can also use these saved transforms as templates to quickly flip an image.

- Again: the change is saved as part of the image orientation info and not as an actual resampling of the image, i.e. if you save your image and reload it in another software that does not read the image orientation info in the header (or displays in image space only), you will not see the change you just applied.

To flip the other axes do the same as above but edit the diagonal entries in the 2nd and 3rd row, for flipping anterior-posterior and inferior-superior directions, respectively.
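To make the effect of editing a diagonal entry concrete, here is a minimal sketch in plain Python, independent of Slicer. The helper names `flip_matrix` and `apply_matrix` are made up for this example. It builds the 4x4 matrix with one diagonal entry set to -1, applies it to a point given in RAS coordinates, and shows that applying the same flip twice restores the original point.

```python
def flip_matrix(axis):
    """Return a 4x4 identity matrix with the diagonal entry of `axis` set to -1.

    axis: 0 = left/right, 1 = anterior/posterior, 2 = inferior/superior.
    """
    m = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
    m[axis][axis] = -1.0
    return m


def apply_matrix(m, point):
    """Apply a 4x4 matrix to a 3D point (homogeneous coordinate 1)."""
    p = list(point) + [1.0]
    return tuple(sum(m[r][c] * p[c] for c in range(4)) for r in range(3))


lr_flip = flip_matrix(0)
ras_point = (10.0, -5.0, 30.0)
flipped = apply_matrix(lr_flip, ras_point)
restored = apply_matrix(lr_flip, flipped)
print(flipped)   # only the first (R) coordinate changes sign
print(restored)  # flipping twice gives back the original point
```

The flip mirrors around the origin of the coordinate system, not around the center of the image, which is why an image whose origin is far from zero appears shifted after flipping.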

How do I fix a wrong image orientation in the header? / My image appears upside down / facing the wrong way / I have incorrect/missing axis orientation

- Problem: My image appears upside down / flipped / facing the wrong way / I have incorrect/missing axis orientation

- Explanation: Slicer presents and interacts with images in physical space, which differs from the way the image is stored by a separate transform that defines how large the voxels are and how the image is oriented in space, e.g. which side is left or right. This information is stored in the image header, and different image file formats have different ways of storing this information. If Slicer supports the image format, it should read the information in the header and display the image correctly. If the image appears upside down or with distorted aspect ratio etc, then the image header information is either missing or incorrect.

- Fix: See the FAQ above on how to flip an image axis within Slicer. You can also correct the voxel dimensions and the image origin in the Volume Information tab of the Volumes module, and you can reorient images via the Transforms module.

- To fix an axis orientation directly in the header info of an image file:

- 1. load the image into Slicer (File: Add Volume, Add Data, Load Scene..)

- 2. save the image back out as NRRD (.nhdr) format.

- 3. open the .nhdr with a text editor of your choice. You should see lines that look like this:

    space: left-posterior-superior
    sizes: 448 448 128
    space directions: (0.5,0,0) (0,0.5,0) (0,0,0.8)

- 4. the three brackets ( ) represent the coordinate axes as defined in the space line above, i.e. the first one is left-right, the second anterior-posterior, and the last inferior-superior. To flip an axis place a minus sign in front of the respective number, which is the voxel dimension. E.g. to flip left-right, change the line to

space directions: (-0.5,0,0) (0,0.5,0) (0,0,0.8)

- 5. alternatively, if the entire orientation is wrong, i.e. coronal slices appear in the axial view etc., it may be easier to change the space field to the proper orientation. Note that Slicer uses RAS space by default, i.e. first (x) axis = left-right, second (y) axis = posterior-anterior, third (z) axis = inferior-superior.

- 6. save & close the edited .nhdr file and reload the image in slicer to see if the orientation is now correct.
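If many headers need the same correction, the manual edit in step 4 can be scripted. The following is a minimal sketch that negates one vector on the `space directions:` line of a header given as text. The function name `flip_space_direction` is made up for this example, and the sketch assumes a plain 3D header like the one shown above (for example, no `none` entries on that line). Keep a backup of the original header before overwriting it.

```python
import re


def flip_space_direction(header_text, axis):
    """Negate one vector on the 'space directions:' line of an NRRD header.

    axis: 0, 1 or 2, counted in the order the vectors appear on the line.
    """
    out_lines = []
    for line in header_text.splitlines():
        if line.startswith("space directions:"):
            vectors = re.findall(r"\(([^)]*)\)", line)
            values = [float(v) for v in vectors[axis].split(",")]
            # Leave zeros untouched so they are not written as "-0".
            vectors[axis] = ",".join("%g" % (-v if v else v) for v in values)
            line = "space directions: " + " ".join("(%s)" % v for v in vectors)
        out_lines.append(line)
    return "\n".join(out_lines)


header = (
    "space: left-posterior-superior\n"
    "sizes: 448 448 128\n"
    "space directions: (0.5,0,0) (0,0.5,0) (0,0,0.8)"
)
flipped_header = flip_space_direction(header, 0)
print(flipped_header)
```

Only the selected vector is rewritten; all other header lines pass through unchanged.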

Can I undo the "centering" of an image?

When importing images, there's a "Center" checkbox, which if checked will reset the image origin to the center of the image grid, and ignore the image origin stored in the header. The same function is available to loaded images in the Volumes module (Volume Information Tab). Results derived from images have their spatial info stored relative to that image origin. So fiducial points or label maps obtained from centered images will also be centered, which means they will align with a centered version of the image but not the original one. Is there a way to return such data to the original uncentered position?

There is no dedicated module or function for that purpose currently implemented, but there are several ways to return data to the position before centering, provided the original image with the old origin is still available. Options are:

- copy the image origin (or entire spatial orientation info) from the original reference image into the header of the "centered" image. For images stored in a format where the header data is accessible in text format this is fairly straightforward. For other formats with binary headers it will require dedicated software to read the header and re-save the image.

- create a transform that embodies the shift and apply it to the data. This is probably the most accessible solution. It will work for all forms of data, i.e. fiducial points, labelmaps, surface models etc. To manually obtain such a transform:

- Load both original and centered image

- Go to the Volumes module, open the Volume Information Tab, then select either image and record the "Image Origin" information displayed. Calculate the difference of the two origins (x1-x2, y1-y2, z1-z2).

- Go to the Transforms module, create a new transform, then enter the origin difference calculated above into the fields for translation (LR=left-right, PA=posterior-anterior, IS=inferior-superior). Note that to replicate the effect of centering, the translation vector is centered-uncentered; going back from centered to uncentered is the inverse of that transform, i.e. uncentered-centered. The "Invert Transform" button in the Transforms module lets you switch between the two.

- Go to the Data module. Drag the centered image and any data derived from it into the transform.

- Set fore- & background to original and centered image to verify, set the fade slider halfway so you can see both images. Verify that the two images align once the "centered" image has been placed into the transform.

- Right click on the meta data (e.g. fiducials) inside the transform, select Harden Transform from the pulldown menu. The data node will move back out of the transform to the main level to indicate the transform has been applied.

- Rename the data node (double click) to document that it has shifted. Then save it as a new file.

See here for a screencast describing this procedure to "uncenter" and shift meta data back to the original position.

- As another alternative you can also run a quick automated registration of the centered to the uncentered image to obtain a transform. Note that this will look for a match based on image similarity and will not be 100% precise, but likely very close.

For large sets of images calculating the offset manually may not be feasible. Below is a rudimentary Python script that reads two or more images (NIfTI format), calculates the offset and saves it as an ITK transform (.tfm) file. You can then import this transform into Slicer and apply it to the data that needs shifting.

    #!/usr/bin/env python
    # Reads 2 or more NIfTI images and writes the image-origin offset of each
    # centered image relative to the first (reference) image as an ITK transform file. v1.0
    # usage: NIICenterOffset2ITK.py RefImg.nii CenteredImg1.nii CenteredImg2.nii ...
    # output: CenteredImg1.nii.centered.tfm CenteredImg2.nii.centered.tfm ...

    import sys

    import nibabel as nib

    # Origin of the reference image: translation column of the qform matrix
    refimg = nib.load(sys.argv[1])
    reforigin = refimg.header.get_qform()[:3, 3].astype(float)

    for aImgName in sys.argv[2:]:
        img = nib.load(aImgName)
        origin = img.header.get_qform()[:3, 3].astype(float)
        offset = reforigin - origin
        # The offset is written as computed; check the direction of the shift
        # in Slicer, since ITK transform files follow the LPS convention.
        with open(aImgName + '.centered.tfm', 'w') as itk_file:
            itk_file.write('#Insight Transform File V1.0\n#Transform 0\n'
                           'Transform: AffineTransform_double_3_3\n')
            itk_file.write('Parameters: 1 0 0 0 1 0 0 0 1 %f %f %f\n' % tuple(offset.tolist()))
            itk_file.write('FixedParameters: 0 0 0\n')

I have some DICOM images that I want to reslice at an arbitrary angle

There are several ways to go about this. If you wish to register your image to another reference/target image, run one of the automated registration methods. If you wish to realign manually, the most efficient way is to use the Transforms module. Once you have the desired orientation, you need to apply the new orientation to the image. You can do this in 2 ways: 1) without or 2) with resampling the image data.

- Without resampling: In the Data module, select the image (inside the transform node) and select "Harden Transform" from the pulldown menu. This will write the new orientation in physical space into the image header. This will work only if the other software you use and the image format you save to support this form of orientation information in the image header.

- With resampling: Go to the ResampleScalarVectorDWIVolume module and create a new image by resampling the original with the new transform. This will incur interpolation blurring but is guaranteed to carry over to all image formats and software.

For more details on manual transforms, see this FAQ and here.

How do I fix incorrect voxel size / aspect ratio of a loaded image volume?

- Problem: My image appears distorted / stretched / with incorrect aspect ratio

- Explanation: Slicer presents and interacts with images in physical space, which differs from the way the image is stored by a set of separate information that represents the physical "voxel size" and the direction/spatial orientation of the axes. If the voxel dimensions are incorrect or missing, the image will be displayed in a distorted fashion. This information is stored in the image header. If the information is missing, a default of isotropic 1 x 1 x 1 mm size is assumed for the voxel.

- Fix: You can correct the voxel dimensions and the image origin in the Info tab of the Volumes module. If you know the correct voxel size, enter it in the fields provided (double click to edit). You should see the display update immediately. Ideally you should try to maintain the original image header information from the point of acquisition. Sometimes this information is lost in format conversion. Try an alternative converter or image format if you know that the voxel size is correctly stored in the original image. Alternatively you can try to edit the information in the image header, e.g. save the volume in NRRD (.nhdr) format and open the ".nhdr" file with a text editor. See FAQ above.

The registration transform file saved by Slicer does not seem to match what is shown

When executing the following procedure:

- Create a transform.

- Adjust it by adjusting the 6 slider bars in the Transforms module.

- Save the transform as a .tfm file.

- Inspect the contents of the .tfm file in a text editor, and compare them to what is shown in the 4x4 matrix in the Transforms module.

- Re-load the .tfm back into Slicer and confirm you have the same data as you saved from Slicer.

You will notice that the original and re-loaded Transforms are identical, but do not match the content of the .tfm file. The issue relates to the difference between Slicer, which uses a "computer graphics" view of the world, and ITK, which uses an "image processing" view of the world. The Slicer transform hierarchy models movement of an object from one spot to another. For example, a transform that has a positive "superior" value wrapped around a volume moves the volume up in patient space.

Conversely, ITK transforms map "backwards": from the display space back to the original image. Imagine stepping sequentially through the output pixels: ITK wants to know the transform back to the input pixels used to calculate the output. Additionally ITK transforms are saved in LPS, whereas the Slicer Transform widget uses RAS coordinates.

In Summary:

- The transform represented in the widget is in RAS.

- The transform represented in the tfm file is in LPS.

- The transform represented in the file is the inverse of the transform in the widget, and has the LPS/RAS conversion applied.

- The parameters in the tfm file are ordered as follows: first the elements of the upper 3x3 part of the matrix, then the elements of its last column (the translation), both after the inversion and LPS/RAS conversion described above.

Please see this discussion for more information, and code examples:

https://www.slicer.org/wiki/Documentation/Nightly/Modules/Transforms#Transform_files
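The summary above can be sketched in plain Python, independent of Slicer and ITK. The function names `invert_affine` and `ras_to_itk_parameters` are made up for this example, and the sketch covers linear transforms only. It inverts the 4x4 matrix shown in the widget, flips the first two axes to convert between RAS and LPS, and lists the 3x3 part row by row followed by the translation. Treat it as an illustration and check the result against a .tfm file saved from Slicer before relying on it.

```python
def invert_affine(m):
    """Invert a 4x4 affine matrix given as nested lists (last row 0 0 0 1)."""
    a, b, c = m[0][:3]
    d, e, f = m[1][:3]
    g, h, i = m[2][:3]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0:
        raise ValueError("matrix is not invertible")
    # Inverse of the 3x3 part via the adjugate.
    r = [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]
    t = [m[0][3], m[1][3], m[2][3]]
    # The inverse translation is -R^-1 * t.
    ti = [-sum(r[row][k] * t[k] for k in range(3)) for row in range(3)]
    return [r[0] + [ti[0]], r[1] + [ti[1]], r[2] + [ti[2]], [0.0, 0.0, 0.0, 1.0]]


def ras_to_itk_parameters(ras_to_parent):
    """Return the 12 tfm parameters for a matrix shown in RAS in the widget."""
    inv = invert_affine(ras_to_parent)
    sign = [-1.0, -1.0, 1.0, 1.0]  # RAS <-> LPS flips the first two axes
    lps = [[sign[r] * inv[r][c] * sign[c] for c in range(4)] for r in range(4)]
    rotation = [lps[r][c] for r in range(3) for c in range(3)]
    translation = [lps[r][3] for r in range(3)]
    return rotation + translation


# A pure translation of (10, 20, 30) mm in RAS.
widget_matrix = [
    [1.0, 0.0, 0.0, 10.0],
    [0.0, 1.0, 0.0, 20.0],
    [0.0, 0.0, 1.0, 30.0],
    [0.0, 0.0, 0.0, 1.0],
]
params = ras_to_itk_parameters(widget_matrix)
print(params[9:])  # translation part as it would appear in the file
```

For this pure translation, only the third translation component changes sign in the file: the inversion negates all three components, and the RAS/LPS conversion negates the first two again.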

I don't understand your coordinate system. What do the coordinate labels R,A,S and (negative numbers) mean?

- It's very important to realize that Slicer displays all images in physical space, i.e. in mm. This requires orientation and size information that is stored in the image header. How that header info is set and read from the header will determine how the image appears in Slicer. RAS is the abbreviation for right, anterior, superior; indicating in order the relation of the physical axis directions to how the image data is stored.

- For a detailed description on coordinate systems see here.

My image is very large, how do I downsample to a smaller size?

Several Resampling modules provide this functionality. If you also have a transform you wish to apply to the volume, we recommend the ResampleScalarVectorDWIVolume module, or the simpler ResampleScalarVolume module. See here for an explanation and overview of Resampling tools.

Resampling in place (changing voxel size):

- 1. Go to the Volumes module

- 2. from the Active Volume pulldown menu, select the image you wish to downsample

- 3. Open the Volume Information tab. Write down the voxel dimensions (Image Spacing) and overall image size (Image Dimensions), e.g. 1.2 x 1.2 x 3 mm voxel size, 512 x 512 x 86. You will need this information to determine the amount of down-/up-sampling you wish to apply

- 4. Go to the ResampleScalarVolume module (found under All modules)

- 5. In the Spacing field, enter the new desired voxel size. This is the original voxel size from above multiplied by your downsampling factor. For example, if you wish to reduce the image to half (in plane), but keep the number of slices, you would enter a new voxel size of 2.4,2.4,3.

- 6. For Interpolation, check the box most appropriate for your input data: for labelmaps check nearest neighbor, for 3D MRI or other bandlimited signals check hamming. For most others leave the linear default. The sinc (hamming, cosine, welch) and bspline (cubic) interpolators tend to produce less blurring than linear, but may cause overshoot near high contrast edges (e.g. negative intensity values for background pixels).

- 7. Input Volume: Select the image you wish to resample

- 8. Output Volume: Select Create New Volume for output volume, then rename to something meaningful, like your input + suffix "_resampled"

- 9. Click Apply

- 10. For labelmaps: go to the Volumes module and check the Labelmap box in the info tab to turn the resampled volume into a labelmap.
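The arithmetic in step 5 is a per-axis multiplication. As a small sketch using the example values from step 3 (the function name `downsampled_spacing` is made up for this illustration):

```python
def downsampled_spacing(spacing, factors):
    """Multiply each voxel dimension by its downsampling factor."""
    return tuple(s * f for s, f in zip(spacing, factors))


original_spacing = (1.2, 1.2, 3.0)  # mm, from the Volume Information tab
factors = (2, 2, 1)                 # halve in-plane resolution, keep slices
new_spacing = downsampled_spacing(original_spacing, factors)
print(new_spacing)  # (2.4, 2.4, 3.0)
```

A factor greater than 1 downsamples along that axis, and a factor smaller than 1 upsamples.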

Resampling in place to match another image in size:

- 1. Go to the ResampleScalarVectorDWIVolume module

- 2. Input Volume: Select the image you wish to resample

- 3. Output Volume: Select Create New Volume for output volume, and rename to something meaningful, like your input + suffix "_resampled"

- 4. Reference Volume: Select the reference image whose size/dimensions you want to match to.

- 5. Interpolation Type: check the box most appropriate for your input data: for labelmaps check nn=nearest neighbor, for 3D MRI or other bandlimited signals check ws=windowed sinc. For most others leave the linear default. The ws (hamming, cosine, welch) and bspline (cubic) interpolators tend to produce less blurring than linear, but may cause overshoot near high contrast edges (e.g. negative intensity values for background pixels).

- 6. Click Apply. Note that if the input and reference volume do not overlap in physical space, i.e. are not at least roughly co-registered, the resampled result may not contain any or all of the input image. This is because the program will resample in the space defined by the reference image and will fill in with zeros if there is nothing at that location. If you get an empty or clipped result, that is most likely the cause. In that case try to re-center the two volumes before resampling.

- 7. For labelmaps: go to the Volumes module and check the Labelmap box in the info tab to turn the resampled volume into a labelmap.
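The overlap condition mentioned in step 6 can be estimated from the origin, spacing and dimensions shown in the Volume Information tab. The sketch below is a simplification: it assumes that the image axes are aligned with the coordinate axes (no rotation) and uses made-up function names and example values. It compares the physical extents of the two volumes axis by axis.

```python
def axis_aligned_bounds(origin, spacing, size):
    """Return (min, max) per axis, assuming image axes follow the coordinate axes."""
    bounds = []
    for o, s, n in zip(origin, spacing, size):
        end = o + s * n
        bounds.append((min(o, end), max(o, end)))
    return bounds


def volumes_overlap(a, b):
    """True if two bounds lists intersect on every axis."""
    return all(lo_a < hi_b and lo_b < hi_a
               for (lo_a, hi_a), (lo_b, hi_b) in zip(a, b))


# Hypothetical example values.
input_bounds = axis_aligned_bounds((0, 0, 0), (1.0, 1.0, 1.0), (100, 100, 50))
far_reference = axis_aligned_bounds((500, 500, 500), (1.0, 1.0, 1.0), (100, 100, 100))
near_reference = axis_aligned_bounds((-50, -50, -25), (1.0, 1.0, 1.0), (100, 100, 100))

print(volumes_overlap(input_bounds, far_reference))   # no shared region
print(volumes_overlap(input_bounds, near_reference))  # shared region exists
```

If the check reports no overlap, resampling into the reference space would produce an empty volume, which matches the symptom described in step 6.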

Resampling in place by specifying new dimensions:

- 1. Go to the ResampleScalarVectorDWIVolume module

- 2. Input Volume: Select the image you wish to resample

- 3. Output Volume: Select Create New Volume for output volume, and rename to something meaningful, like your input + suffix "_resampled"

- 4. Reference Volume: leave at "none"

- 5. Interpolation Type: check the box most appropriate for your input data: for labelmaps check nn=nearest neighbor, for 3D MRI or other bandlimited signals check ws=windowed sinc. For most others leave the linear default. The ws (hamming, cosine, welch) and bspline (cubic) interpolators tend to produce less blurring than linear, but may cause overshoot near high contrast edges (e.g. negative intensity values for background pixels).


- 6. Manual Output Parameters: here you specify the new voxel size / spacing and dimensions. Note that you need to set both. If only the voxel size is specified, the image is resampled but retains its original dimensions (i.e. empty/zero space). If only the dimensions are specified the image will be resampled starting at the origin and cropped but not resized.

- new voxel size: calculate the new voxel size and enter it in the Spacing field, as described in step 5 of "Resampling in place (changing voxel size)" above

- new image dimensions: enter the new dimensions under Size. To prevent clipping, the output field of view (FOV = voxel size * image dimensions) should match that of the input.

- 7. leave rest at default and click Apply

- 8. For labelmaps: go to the Volumes module and check the Labelmap box in the info tab to turn the resampled volume into a labelmap.
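To pick dimensions that keep the field of view unchanged, divide the input FOV by the new voxel size along each axis. The following sketch uses a made-up function name and the example values from the voxel size section above. It rounds up so that the output covers at least the input extent.

```python
import math


def size_for_spacing(old_spacing, old_size, new_spacing):
    """Number of voxels per axis so that the new FOV covers the old FOV."""
    new_size = []
    for s_old, n_old, s_new in zip(old_spacing, old_size, new_spacing):
        fov = s_old * n_old  # field of view along this axis, in mm
        # The small tolerance guards against floating point noise before rounding up.
        new_size.append(int(math.ceil(fov / s_new - 1e-9)))
    return tuple(new_size)


old_spacing = (1.2, 1.2, 3.0)
old_size = (512, 512, 86)
new_spacing = (2.4, 2.4, 3.0)
new_size = size_for_spacing(old_spacing, old_size, new_spacing)
print(new_size)  # (256, 256, 86)
```

Entering the resulting values under Size together with the new Spacing keeps the resampled image from being clipped or padded.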

Errors

Registration failed with an error. What should I try next?

- Problem: automated registration fails, status message says "completed with error" or similar.

- Explanation: Registration methods are mostly implemented as commandline modules, where the input to the algorithm is provided as temporary files and the algorithm then seeks a solution independently from the activity of the Slicer GUI. You will notice that you are free to continue using other Slicer functions while a registration is running. Several reasons can lead to failure; most commonly they are wrong or inconsistent input, or lack of convergence if the images are too far apart initially.

- check your input:

- did you provide both a fixed and a moving image?

- did you select an output (transform and/or new output volume)?

- do the two images have any overlap? Can you see them both in the slice views? If not try to recenter (see "Manual Recenter" in FAQ above). When rerunning the registration, try selecting an initializer

- are inputs consistent? E.g. General Registration (BRAINS) module will complain if you check a "BSpline" registration phase but do not select a BSpline output transform, or if you request masking and do not specify masking input or output.

- if the above checks reveal nothing, open the Error Log window (Window menu) and scroll to the bottom to see the most recent entries related to the registration. Usually you will see a commandline entry that shows which arguments were given to the algorithm, and a standard output or similar that lists what the algorithm returned. More detailed error info can be found in either this entry, or in the "ERROR: ..." line at the top of the list. Click on the corresponding line and look for an explanation in the provided text. If there was a problem with the input arguments, it would be reported here.

- If the Error log does not provide useful clues, try varying some of the parameters. Note that if the algorithm aborts/fails right away and returns immediately with an error, most likely some input is wrong/inconsistent or missing.

- check the initial misalignment, if images are too far apart and there is no overlap, registration may fail. Consider initialization with a prior manual alignment, centering the images or using one of the initialization methods provided by the modules

- write to the Slicer user group (slicer-users@bwh.harvard.edu) and inform them of the error. We're keen on learning so we can improve the program. You will get the fastest and best reply if you copy and paste the error messages found in the Error Log into your mail.

Registration result is wrong or worse than before?

- Problem: automated registration provides an alignment that is insufficient, possibly worse than the initial position

- Explanation: The automated registration algorithms (except for fiducial and manual registration) in Slicer operate on image intensity and try to move images so that similar image content is aligned. This is influenced by many factors such as image contrast, resolution, voxel anisotropy, artifacts such as motion or intensity inhomogeneity, pathology etc, the initial misalignment and the parameters selected for the registration.

- re-run the registration with parameter modifications:

- if images have little initial overlap or are far apart in orientation, try a (different) initializer: e.g. General Registration (BRAINS) module has several initializers, details in their documentation and also in this FAQ above.

- do the two images have any overlap? Can you see them both in the slice views? If not try to recenter (see "Manual Recenter" in FAQ above).

- try a lower DOF registration first to see if that fails. If the lower DOF fails, subsequent ones will also. For nonrigid registration, try adding intermediate steps, such as a similarity (7 DOF) or Affine (12 DOF) transform.

- if automated initializers do not help, try a manual initial alignment (see FAQ above). This need not be perfect, as long as it establishes good overlap and roughly the same direction. Then try rerunning the registration using the manual transform as a starting point (Initialization Transform in the General Registration (BRAINS) module).

- if initial overlap is ok but registration "drifts away", there is either insufficient sample data or distracting image content. Try increasing the number of sample points. See FAQ below for estimates of sample points.

- insufficient contrast: consider adjusting the Histogram Bins (where avail.) to tune the algorithm to weigh small intensity variations more or less heavily

- strong anisotropy:' if one or both of the images have strong voxel anisotropy of ratios 5 or more, rotational alignment may become increasingly difficult for an automated method. Consider increasing the sample points and reducing the Histogram Bins.

- distracting image content: pathology, strong edges, or a clipped FOV with image content at the border of the image can easily dominate the cost function driving the registration algorithm. Masking is a powerful remedy for this problem: create a mask (binary labelmap/segmentation) that excludes the distracting parts and includes only those areas of the image where matching content exists. This requires one of the modules that support masking input, such as the General Registration (BRAINS) module or the Expert Automated Registration module. With modules that do not support masking, the next best option is to mask the image manually and create a temporary masked image where the excluded content is set to 0 intensity; the MaskScalarVolume module performs this task.

- you can adjust/correct an obtained registration manually, within limits, as outlined under manual initial alignment in this FAQ above.
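As a rough aid for the anisotropy item in the list above, the ratio can be computed from the voxel spacing shown in the Volumes module. This is a plain-Python sketch, not a Slicer API: the function names are made up for illustration, and the threshold of 5 is simply the value quoted above.

```python
def anisotropy_ratio(spacing):
    """Return the largest voxel dimension divided by the smallest (1.0 = isotropic)."""
    if len(spacing) != 3 or min(spacing) <= 0:
        raise ValueError("spacing must be three positive numbers (mm)")
    return max(spacing) / min(spacing)


def is_strongly_anisotropic(spacing, threshold=5.0):
    """True if the ratio reaches the 'strong anisotropy' level quoted in this FAQ."""
    return anisotropy_ratio(spacing) >= threshold


# Example: 0.9 x 0.9 x 5.0 mm voxels, as in a thick-slice acquisition
print(anisotropy_ratio((0.9, 0.9, 5.0)))
print(is_strongly_anisotropic((0.9, 0.9, 5.0)))
```

If the check is positive, the advice above applies: consider more sample points and fewer Histogram Bins.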

Registration results are inconsistent and don't work on some image pairs. Are there ways to make registration more robust?

The key factors that influence registration robustness are the number of sample points, the initial degrees of freedom of the transform, the type of similarity metric, the initial misalignment, and image contrast/content differences. Initialization methods that seek a first alignment before beginning the optimization can, in particular, make things worse. If the initial position is already sufficiently close (i.e. more than 70% overlap and less than 20% rotational misalignment), consider turning off initialization if available (e.g. in the General Registration (BRAINS) module (under the Registration menu) and the Expert Automated Registration module).

Try increasing the sample points. Guidelines on selecting sample points are given here. The degrees of freedom usually are given by the overall task and not subject to variation, but depending on initial misalignment, robustness can greatly improve by an iterative approach that gradually increases DOF rather than starting with a high DOF setting. The General Registration (BRAINS) module and Expert Automated Registration module both allow prescriptions of iterative DOF.
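For readers who run General Registration (BRAINS) from the command line, the iterative-DOF idea might be expressed as in the sketch below. It only builds an argument list and runs nothing. The flag names are written as we recall them from the BRAINSFit command-line help and should be verified with `BRAINSFit --help` for your Slicer version; the file names and the sampling value are hypothetical.

```python
def brainsfit_args(fixed, moving, output_transform,
                   stages=("Rigid", "ScaleVersor3D", "Affine"),
                   init_mode="useMomentsAlign",
                   sampling_percentage=0.02):
    """Build an argument list for a staged BRAINSFit run.

    Assumption: flag names match your BRAINSFit version; check `BRAINSFit --help`.
    """
    return [
        "BRAINSFit",
        "--fixedVolume", fixed,
        "--movingVolume", moving,
        "--linearTransform", output_transform,
        # Initializer; "Off" skips automated initialization
        "--initializeTransformMode", init_mode,
        # Stages run in order, each starting from the previous result,
        # so DOF increase gradually (rigid, then rigid + scale, then affine)
        "--transformType", ",".join(stages),
        # Fraction of voxels sampled for the metric; raise it for more sample points
        "--samplingPercentage", str(sampling_percentage),
    ]


# Hypothetical file names, for illustration only
print(" ".join(brainsfit_args("fixed.nrrd", "moving.nrrd", "moving_to_fixed.h5")))
```

Passing `init_mode="Off"` corresponds to turning off initialization when the images are already close.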

If using a cost/criterion function other than mutual information (MI), note that MI tends to be the most forgiving/robust toward differences in image contrast.

Also see the.

How do I register images that are very far apart / do not overlap?

- Problem: when you place one image in the background and another in the foreground, the one in the foreground will not be (entirely) visible when switching back and forth

- Explanation: Slicer chooses the field of view (FOV) for the display based on the image selected for the background. The FOV will therefore be centered around the location defined by that image's origin. If two images have origins that differ significantly, they cannot be viewed well simultaneously.

- Fix: recenter one or both images as follows:

- 1. Go to the Volumes module

- 2. Select the image to recenter from the Active Volume menu

- 3. Select/open the Volume Information tab.

- 4. Click the Center Volume button. You will notice how the Image Origin numbers displayed above the button change. If you have the image selected as foreground or background, you may see it move to a new location.

- 5. Repeat steps 2-4 for the other image volumes

- 6. In the slice view menu, click on the Fit to Window button (a small square next to the pin in the top left corner of each view)

- 7. Images should now be roughly in the same space. Note that this re-centering is considered a change to the image volume, and Slicer will mark the image for saving next time you select Save.
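To make the change in the Image Origin numbers less mysterious, here is a minimal sketch of the arithmetic behind centering a volume: the origin is chosen so that the geometric center of the voxel grid lands at (0, 0, 0). This is plain Python illustrating the idea, not Slicer's own code; the function name and the example values are assumptions.

```python
def centered_origin(dimensions, spacing, directions):
    """Origin that puts the geometric center of the voxel grid at (0, 0, 0).

    dimensions: voxel counts along i, j, k
    spacing:    voxel size in mm along i, j, k
    directions: 3x3 matrix whose columns are the unit directions of i, j, k
    """
    # Distance from the first voxel center to the grid center along each voxel axis (mm)
    half = [(n - 1) * s / 2.0 for n, s in zip(dimensions, spacing)]
    # Rotate that offset into physical space and negate it
    return [-sum(directions[r][c] * half[c] for c in range(3)) for r in range(3)]


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Hypothetical 256 x 256 x 130 volume with 1.0 x 1.0 x 1.2 mm voxels
print(centered_origin((256, 256, 130), (1.0, 1.0, 1.2), identity))
```

Two volumes centered this way share the same physical center, which is why they become visible together after Fit to Window.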

How do I initialize/align images with very different orientations and no overlap?

I would like to register two datasets, but the centers of the two images are so different that they don't overlap at all. Is there a way to pre-register them automatically or manually to create an initial starting transformation?

- Manual Recenter: See the FAQ above for re-centering images

- 1. Go to the Volumes module

- 2. Select the image to recenter from the Active Volume menu

- 3. Select/open the Volume Information tab.

- 4. Click the Center Volume button. You will notice how the Image Origin numbers displayed above the button change. If you have the image selected as foreground or background, you may see it move to a new location.

- 5. Repeat steps 2-4 for the other image volumes

- 6. In the slice view menu, click on the Fit to Window button (a small square next to the pin in the top left corner of each view)

- 7. Images should now be roughly in the same space. Note that this re-centering is considered a change to the image volume, and Slicer will mark the image for saving next time you select Save.

- Automatic Initialization: Most registration tools have initializers that should take care of the initial alignment in the scenario you described. However, since they are often based on heuristics, they may work well in some cases and not in others. The two modules that offer the most initializer options are the General Registration (BRAINS) module (under the Registration menu) and the Expert Automated Registration module.

- General Registration (BRAINS) module initializers:

- Initialization Transform: here you can specify a transform from which to start. You can perform a manual alignment (see here for tutorial) and then feed this as initializer here.

- Initialization Transform Mode: these options generate automated initializations for you:

- Off: assumes that the physical spaces of the images are close, and that centering in terms of the image Origins is a good starting point.

- useCenterOfHeadAlign: recommended for registering brain MRI where all or most of the head is within the FOV

- useMomentsAlign: recommended for image pairs with similar contrast, scale and content.

- useGeometryAlign: recommended for image pairs with similar FOV for both objects. This aligns the image grid volumes regardless of content.

- useCenterOfROIAlign: recommended if you have a mask for each image that defines the regions you want registered. This will initialize based on those two masks.

- Tip: you can run the registration with just the initializer to see what kind of transformation it produces. In that case select a Slicer Linear Transform output but leave all boxes under Registration Phases unchecked.

- ExpertAutomatedRegistration module initializers:

- None: directly starts with optimization from the current position

- CentersOfMass: recommended for image pairs with similar contrast, scale and content. Similar to useMomentsAlign above.

- SecondMoments: same as above, but also calculating (principal) axis directions

- Image Centers: similar to useGeometryAlign above: recommended for image pairs with similar FOV for both objects. This aligns the image grid volumes regardless of content.
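The difference between a geometry-based initializer (useGeometryAlign, Image Centers) and a moments-based one (useMomentsAlign, CentersOfMass) can be illustrated with a toy 2D example. The sketch below is plain Python working in pixel units; it ignores spacing and orientation and is not how either module is implemented. All names are hypothetical.

```python
def geometric_center(image):
    """Center of the pixel grid (row, col), ignoring intensities."""
    rows, cols = len(image), len(image[0])
    return ((rows - 1) / 2.0, (cols - 1) / 2.0)


def center_of_mass(image):
    """Intensity-weighted center (row, col); needs a non-zero total intensity."""
    total = sum(sum(row) for row in image)
    if total == 0:
        raise ValueError("image has no intensity")
    r = sum(i * v for i, row in enumerate(image) for v in row) / total
    c = sum(j * v for row in image for j, v in enumerate(row)) / total
    return (r, c)


def initial_translation(fixed, moving, center):
    """Shift that moves the chosen center of `moving` onto that of `fixed`."""
    f, m = center(fixed), center(moving)
    return (f[0] - m[0], f[1] - m[1])


# Same grid size, but the bright object sits in a different place
fixed = [[0, 0, 0, 0],
         [0, 9, 9, 0],
         [0, 9, 9, 0],
         [0, 0, 0, 0]]
moving = [[9, 9, 0, 0],
          [9, 9, 0, 0],
          [0, 0, 0, 0],
          [0, 0, 0, 0]]

print(initial_translation(fixed, moving, geometric_center))  # grids coincide: no shift
print(initial_translation(fixed, moving, center_of_mass))    # object offset is recovered
```

The geometry-based choice only helps when both images frame the object similarly, whereas the moments-based choice follows the image content and so depends on similar contrast in both images.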

Can I manually adjust or correct a registration?

Yes for linear (rigid to affine) transforms; not without resampling for nonrigid transforms.

The automated registration algorithms (except for fiducial and surface registration) in Slicer operate on image intensity and try to move images so that similar image content is aligned. This is influenced by many factors such as image contrast, resolution, voxel anisotropy, artifacts such as motion or intensity inhomogeneity, pathology etc, the initial misalignment and the parameters selected for the registration. Before attempting manual correction, it is usually advisable to retry an automated run with modified parameters, additional initializers or masks.

You can adjust/correct an obtained registration manually, within limits. There's a brief (no sound) video that demonstrates the procedure here: Manual Registration Movie (2 min)

If the transform is linear, i.e. a rigid or affine transform, you can access the rigid components (translation and rotation) of that transform via the Transforms module. Alternatively (and maybe safer), you can create an additional new transform and nest the old one inside it. Then, once you approve of the adjustment, merge the two (via Harden Transform).

- Go to the Data module, right click on the node labeled "Scene" and select "Insert Transform" from the pulldown menu

- You should see a new transform node being added to the tree, named "LinearTransform_1" or similar.

- Left click on the volume you wish to register, and drag it onto the new transform node. You should see a "+" appear in front of the transform node, and clicking on it should reveal the volume now inside/under that transform.

- Make sure the image for which you wish to adjust the registration is selected and visible in the slice views, preferably all 3 views (sagittal, coronal, axial).

- Switch to the Transforms module and (if not selected already) select the newly created transform from the Active Transform menu.

- Adjust the translation and rotation sliders to change the current position. To get a finer degree of control, enter smaller numbers for the translation limits and enter rotation angles numerically in increments of a few degrees at a time.
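Why nesting is safe can be seen from how linear transforms combine: the outer (parent) transform is applied after the inner one, and merging the two amounts to multiplying their 4x4 matrices. The sketch below shows this with plain Python lists; it does not call Slicer, and the translation values are hypothetical.

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]


def translation(tx, ty, tz):
    """4x4 homogeneous matrix for a pure translation (mm)."""
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]


# Hypothetical values: the automated result and a small manual correction
registration = translation(12.0, -3.0, 5.0)
correction = translation(-1.5, 0.0, 0.5)

# The correction is the parent, so it is applied after the registration
combined = matmul4(correction, registration)
print([row[3] for row in combined[:3]])
```

Until the two are merged, the original registration matrix stays untouched, so a poor manual adjustment can be discarded by resetting the outer transform to identity.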

Diffusion

How do I register a DWI image dataset to a structural reference scan? (Cookbook)

- Problem: The DWI/DTI image is not in the same orientation as the reference image that I would like to use to locate particular anatomy; the DWI image is distorted and does not line up well with the structural images

- Explanation: DWI images are often acquired as EPI sequences that contain significant distortions, particularly in the frontal areas. Also because the image is acquired before or after the structural scans, the subject may have moved in between and the position is no longer the same.

- Fix: obtain a baseline image from the DWI sequence, register that with the structural image and then apply the obtained transform to the DTI tensor. The two chief issues with this procedure deal with the difference in image contrast between the DWI and the structural scan, and with the common anisotropy of DWI data.

- Overall Strategy and detailed instructions for registration & resampling can be found in our DWI registration cookbook

- You can find example cases in the DWI chapter of the Slicer Registration Case Library, which includes example datasets and step-by-step instructions. Find an example closest to your scenario and Slicer version and perform the registration steps recommended there.
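The "obtain a baseline image" step in the fix above can be pictured as follows: the baseline volumes are those acquired with a b-value of zero. The sketch is plain Python on flattened toy data and does not use Slicer. Averaging several baselines is our assumption for illustration (picking the first one is equally common), and all names are hypothetical.

```python
def baseline_indices(b_values, tolerance=1e-6):
    """Indices of the volumes acquired without diffusion weighting (b = 0)."""
    return [i for i, b in enumerate(b_values) if abs(b) <= tolerance]


def mean_baseline(volumes, b_values):
    """Average the baseline volumes voxel by voxel.

    volumes: one flat list of voxel intensities per acquired volume
    """
    idx = baseline_indices(b_values)
    if not idx:
        raise ValueError("no baseline (b = 0) volume found")
    n_voxels = len(volumes[0])
    return [sum(volumes[i][v] for i in idx) / len(idx) for v in range(n_voxels)]


# Toy data: four volumes of two voxels each; the first and last are baselines
b_values = [0, 1000, 1000, 0]
volumes = [[10, 20], [1, 2], [3, 4], [30, 40]]
print(mean_baseline(volumes, b_values))
```

The resulting baseline image is what gets registered to the structural scan.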

Is there a way to perform an Eddy current correction on DWI in Slicer?

Yes. Download the GTRACT extension (available from the menu View->Extension Manager). Once it is installed, you will see a new module category under the Diffusion category called "GTRACT". Within this category there is a module called "Coregister B-values". This module takes a DWI image and outputs a DWI image in which every DWI volume is co-registered to one of the B0 images (by default the first one); this can be regarded as motion correction. In the "Registration Parameters" section of this module there is a checkbox labeled "Eddy Current Correction". Checking it tunes some of the registration parameters so that some eddy current artifacts are corrected.

Masking

What's the purpose of masking / VOI in registration? / What does the masking option in registration accomplish ?

The masking option is a very effective tool to focus the registration onto the image content that is most important. It is often the case that the alignment of the two images is more important in some areas than others. Automated registration based on image intensity is easily dominated by the portions in the image that contain the most contrast/content. If you have much content that is present in only one image, masking that area out will prevent it from leading the registration astray. Masking provides the opportunity to specify the important regions and make the algorithm ignore the image content outside the mask. This does not mean that the rest is not registered, but rather that it moves along passively, i.e. areas outside the mask do not actively contribute to the cost function that determines the quality of the match. Note the mask defines the areas to include, i.e. to exclude a particular region, build a mask that contains the entire object/image except that region.
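Because the mask lists what to include, excluding a region means inverting a labelmap of that region. A minimal plain-Python sketch on a toy 2D labelmap follows; real masks are 3D volumes edited in Slicer, and the function name is made up for illustration.

```python
def include_mask_from_exclusion(exclude):
    """Turn a binary 'region to ignore' labelmap into an 'include' mask."""
    return [[0 if v else 1 for v in row] for row in exclude]


# 1 marks the region to be ignored, e.g. a lesion present in only one image
exclude = [[0, 0, 0],
           [0, 1, 1],
           [0, 1, 1]]

include = include_mask_from_exclusion(exclude)
for row in include:
    print(row)
```

In practice the include mask would usually also be limited to the object itself rather than covering the whole image grid.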

- Note: masking within the registration is different from feeding a masked/stripped image as input, where areas of no interest have been erased. Such masking can still produce valuable results and is a viable option if the module in question does not provide a direct masking option. But masking by erasing portions of the image content can produce sharp edges that registration methods can lock onto. If the edge becomes dominant, then the resulting registration will be only as good as the accuracy of the masking. That problem does not occur when using the masking option within the module.

- The following modules currently (v.3.6.1) provide masking: