Documentation/4.1/Modules/BRAINSFit


Revision as of 16:23, 17 April 2012


Introduction and Acknowledgements


This work is part of the National Alliance for Medical Image Computing (NA-MIC), funded by the National Institutes of Health through the NIH Roadmap for Medical Research, Grant U54 EB005149. Information on NA-MIC can be obtained from the NA-MIC website.


Module Description

Use Cases

Most frequently used for these scenarios:

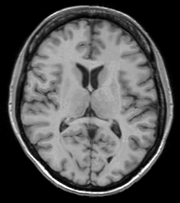

- Use Case 1: Same Subject: Longitudinal

For this use case we're registering a baseline T1 scan with a follow-up T1 scan of the same subject acquired a year later. Both images are available on Midas as testT1.nii.gz and testT1Longitudinal.nii.gz.

First we set the fixed and moving volumes as well as the output transform and output volume names.

--fixedVolume testT1.nii.gz \
--movingVolume testT1Longitudinal.nii.gz \
--outputVolume testT1LongRegFixed.nii.gz \
--outputTransform longToBase.xform \

Since both scans are of the same subject and little is likely to have changed over the year, we'll use a Rigid registration. If the registration is poor, or there is reason to expect anatomical changes, additional transforms may be needed; list them in order in a comma-separated value, such as "Rigid,ScaleVersor3D,ScaleSkewVersor3D,Affine,BSpline".

--transformType Rigid \
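When a registration needs several stages, they are passed as one comma-separated value in the order they should run. The bash sketch below assembles that value from an ordered list; `join_transforms` is a hypothetical helper for illustration, not part of BRAINSFit.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of BRAINSFit): join transform stages, given in
# the order they should run, into one comma-separated --transformType value.
join_transforms() {
  local IFS=,   # "$*" joins the arguments with the first character of IFS
  echo "$*"
}

join_transforms Rigid                                                  # prints Rigid
join_transforms Rigid ScaleVersor3D ScaleSkewVersor3D Affine BSpline   # prints Rigid,ScaleVersor3D,ScaleSkewVersor3D,Affine,BSpline
```

The result can go straight into a command, e.g. `--transformType "$(join_transforms Rigid Affine)"`.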

The scans are the same modality, so we'll use --histogramMatch to match their intensity profiles, as this tends to help registration. If there are lesions or tumors that vary between the images this may not be a good idea, as it will make differences between the images harder to detect.

--histogramMatch \
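The rule described here (use --histogramMatch only for same-modality scans where no lesion or tumor changes are expected) can be written down as a small bash sketch. `histogram_flag` and its arguments are hypothetical names used for illustration only.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of BRAINSFit): print --histogramMatch only when
# both scans share a modality and no lesion/tumor changes are expected.
histogram_flag() {
  local fixed_modality=$1 moving_modality=$2 lesions_expected=${3:-no}
  if [[ $fixed_modality == "$moving_modality" && $lesions_expected == no ]]; then
    printf '%s\n' --histogramMatch
  fi
}

histogram_flag T1 T1       # prints --histogramMatch
histogram_flag T1 T1 yes   # prints nothing: lesions may differ between scans
histogram_flag T1 T2       # prints nothing: different modalities
```

Use the substitution unquoted, e.g. `BRAINSFit ... $(histogram_flag T1 T2) ...`, so that an empty result adds no argument at all.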

To start with the best possible initial alignment we use --initializeTransformMode. We're working with human heads, so we pick useCenterOfHeadAlign, which detects the center of the head even when varying amounts of neck or shoulders are present.

--initializeTransformMode useCenterOfHeadAlign \

ROI masks normally improve registration, but we haven't generated any, so we set --maskProcessingMode ROIAUTO to have the masks generated automatically.

--maskProcessingMode ROIAUTO \

The registration generally performs better if the mask includes some background, so that the tissue boundary is clearly defined. The parameter that expands the mask outside the brain is ROIAutoDilateSize (under Registration Debugging Parameters if using the GUI). Its value is in millimeters, so a good starting value is 3.

--ROIAutoDilateSize 3 \

Last, we set the interpolation mode to Linear, which is a decent tradeoff between quality and speed. If the best possible interpolation is needed regardless of processing time, select WindowedSinc instead of Linear.

--interpolationMode Linear

The full command is:

BRAINSFit --fixedVolume testT1.nii.gz \
  --movingVolume testT1Longitudinal.nii.gz \
  --outputVolume testT1LongRegFixed.nii.gz \
  --outputTransform longToBase.xform \
  --transformType Rigid \
  --histogramMatch \
  --initializeTransformMode useCenterOfHeadAlign \
  --maskProcessingMode ROIAUTO \
  --ROIAutoDilateSize 3 \
  --interpolationMode Linear
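Long commands built with trailing backslashes are easy to break: a space after a backslash, or a missing backslash, silently ends the command early. A sketch that keeps Use Case 1's options in a bash array instead is below. The `DRY_RUN` variable is our own convention, not a BRAINSFit option, and actually running the command assumes `BRAINSFit` is on your PATH.

```shell
#!/usr/bin/env bash
# Sketch: Use Case 1 with the options held in a bash array, so each flag and
# value stays a separate argument and no line continuations are needed.
# By default it only prints the command; set DRY_RUN=0 to execute it
# (assumes BRAINSFit is on your PATH).
set -euo pipefail

args=(
  --fixedVolume testT1.nii.gz
  --movingVolume testT1Longitudinal.nii.gz
  --outputVolume testT1LongRegFixed.nii.gz
  --outputTransform longToBase.xform
  --transformType Rigid
  --histogramMatch
  --initializeTransformMode useCenterOfHeadAlign
  --maskProcessingMode ROIAUTO
  --ROIAutoDilateSize 3
  --interpolationMode Linear
)

if [[ ${DRY_RUN:-1} == 1 ]]; then
  # %q shell-quotes each argument so the printed line can be pasted back in
  printf 'BRAINSFit'
  printf ' %q' "${args[@]}"
  printf '\n'
else
  BRAINSFit "${args[@]}"
fi
```

Defaulting to a dry run lets you inspect the exact arguments before starting a long registration.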

- Use Case 2: Same Subject: MultiModal

For this use case we're registering a T1 scan with a T2 scan collected in the same session. Both images are available on Midas as testT1.nii.gz and testT2.nii.gz.

First we set the fixed and moving volumes as well as the output transform and output volume names.

--fixedVolume testT1.nii.gz \
--movingVolume testT2.nii.gz \
--outputVolume testT2RegT1.nii.gz \
--outputTransform T2ToT1.xform \

Since both scans are of the same subject and from the same session, we'll use a Rigid registration.

--transformType Rigid \

The scans are different modalities, so we absolutely DO NOT want to use --histogramMatch: it would try to map the T2 intensities onto T1 intensities, resulting in an image that is neither, and hence useless.

To start with the best possible initial alignment we use --initializeTransformMode. We're working with human heads, so we pick useCenterOfHeadAlign, which detects the center of the head even when varying amounts of neck or shoulders are present.

--initializeTransformMode useCenterOfHeadAlign \

ROI masks normally improve registration, but we haven't generated any, so we set --maskProcessingMode ROIAUTO to have the masks generated automatically.

--maskProcessingMode ROIAUTO \

The registration generally performs better if the mask includes some background, so that the tissue boundary is clearly defined. The parameter that expands the mask outside the brain is ROIAutoDilateSize (under Registration Debugging Parameters if using the GUI). Its value is in millimeters, so a good starting value is 3.

--ROIAutoDilateSize 3 \

Last, we set the interpolation mode to Linear, which is a decent tradeoff between quality and speed. If the best possible interpolation is needed regardless of processing time, select WindowedSinc instead of Linear.

--interpolationMode Linear

The full command is:

BRAINSFit --fixedVolume testT1.nii.gz \
  --movingVolume testT2.nii.gz \
  --outputVolume testT2RegT1.nii.gz \
  --outputTransform T2ToT1.xform \
  --transformType Rigid \
  --initializeTransformMode useCenterOfHeadAlign \
  --maskProcessingMode ROIAUTO \
  --ROIAutoDilateSize 3 \
  --interpolationMode Linear
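Use Cases 1 and 2 share every option except the file names and --histogramMatch. A hypothetical bash helper that makes this explicit might look like the sketch below; `rigid_head_args` and the global `ARGS` array are illustrative names, not part of BRAINSFit.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of BRAINSFit): fill the global array ARGS with
# the rigid head-registration options shared by Use Cases 1 and 2.
# $5 is "yes" when both scans share a modality; only then is --histogramMatch added.
rigid_head_args() {
  local fixed=$1 moving=$2 out_volume=$3 out_transform=$4 same_modality=$5
  ARGS=(
    --fixedVolume "$fixed" --movingVolume "$moving"
    --outputVolume "$out_volume" --outputTransform "$out_transform"
    --transformType Rigid
  )
  if [[ $same_modality == yes ]]; then
    ARGS+=(--histogramMatch)
  fi
  ARGS+=(
    --initializeTransformMode useCenterOfHeadAlign
    --maskProcessingMode ROIAUTO
    --ROIAutoDilateSize 3
    --interpolationMode Linear
  )
}

# Use Case 2: T2 onto T1, different modalities, so no histogram matching.
rigid_head_args testT1.nii.gz testT2.nii.gz testT2RegT1.nii.gz T2ToT1.xform no
printf '%s\n' "BRAINSFit ${ARGS[*]}"
```

After calling the helper, the registration itself would be run as `BRAINSFit "${ARGS[@]}"`.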

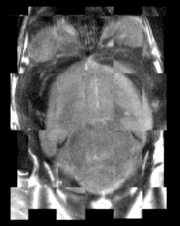

- Use Case 3: Mouse Registration

Here we'll register brains from two different mice together. The fixed and moving mouse brains used in this example are available on Midas.

First we set the fixed and moving volumes as well as the output transform and output volume names.

--fixedVolume mouseFixed.nii.gz \
--movingVolume mouseMoving.nii.gz \
--outputVolume movingRegFixed.nii.gz \
--outputTransform movingToFixed.xform \

Since the subjects are different, we are going to use transforms all the way through BSpline. Building up transforms one type at a time can't hurt and might help, so we include every transform from Rigid through BSpline in the transformType parameter.

--transformType Rigid,ScaleVersor3D,ScaleSkewVersor3D,Affine,BSpline \

The scans are the same modality, so we'll use --histogramMatch.

--histogramMatch \

To start with the best possible initial alignment we use --initializeTransformMode, but we aren't working with human heads, so we can't pick useCenterOfHeadAlign. Instead we pick useMomentsAlign, which does a reasonable job of aligning the centers of mass.

--initializeTransformMode useMomentsAlign \
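The initialization choice in these examples comes down to whether the subject is a human head. A hypothetical bash sketch of that decision follows; the `init_mode` helper and its `human` label are illustrative, not part of BRAINSFit.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of BRAINSFit): pick the --initializeTransformMode
# value used in these examples. useCenterOfHeadAlign is meant for human heads;
# for anything else (e.g. mouse brains) fall back to useMomentsAlign.
init_mode() {
  case $1 in
    human) printf '%s\n' useCenterOfHeadAlign ;;
    *)     printf '%s\n' useMomentsAlign ;;
  esac
}

init_mode human   # prints useCenterOfHeadAlign
init_mode mouse   # prints useMomentsAlign
```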

ROI masks normally improve registration, but we haven't generated any, so we set --maskProcessingMode ROIAUTO to have the masks generated automatically.

--maskProcessingMode ROIAUTO \

Since mouse brains are much smaller than human brains, there are two advanced parameters we'll need to tweak: ROIAutoClosingSize and ROIAutoDilateSize (both under Registration Debugging Parameters if using the GUI). These values are in millimeters, so a good starting value for mice is 0.9.

--ROIAutoClosingSize 0.9 \
--ROIAutoDilateSize 0.9 \
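Because these sizes are in millimeters rather than voxels, values tuned for human scans do not carry over to much smaller images. If you prefer to reason in voxels, the conversion is the voxel count times the voxel spacing. The helper below is a hypothetical sketch that assumes isotropic spacing; the spacings in the usage lines are illustrative only and are not taken from the example mouse data.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of BRAINSFit): convert a size given in voxels
# into millimeters for --ROIAutoClosingSize / --ROIAutoDilateSize, assuming
# isotropic voxel spacing in mm. awk handles the floating-point multiply.
voxels_to_mm() {
  awk -v n="$1" -v spacing="$2" 'BEGIN { printf "%g\n", n * spacing }'
}

voxels_to_mm 3 1.0   # 3 voxels at 1 mm spacing, prints 3
voxels_to_mm 3 0.3   # 3 voxels at 0.3 mm spacing, prints 0.9
```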

Last, we set the interpolation mode to Linear, which is a decent tradeoff between quality and speed. If the best possible interpolation is needed regardless of processing time, select WindowedSinc instead of Linear.

--interpolationMode Linear

The full command is:

BRAINSFit --fixedVolume mouseFixed.nii.gz \
  --movingVolume mouseMoving.nii.gz \
  --outputVolume movingRegFixed.nii.gz \
  --outputTransform movingToFixed.xform \
  --transformType Rigid,ScaleVersor3D,ScaleSkewVersor3D,Affine,BSpline \
  --histogramMatch \
  --initializeTransformMode useMomentsAlign \
  --maskProcessingMode ROIAUTO \
  --ROIAutoClosingSize 0.9 \
  --ROIAutoDilateSize 0.9 \
  --interpolationMode Linear

Tutorials

Links to tutorials that use this module

Panels and their use

Parameters:


Similar Modules

- Point to other modules that have similar functionality

References

- BRAINSFit: Mutual Information Registrations of Whole-Brain 3D Images, Using the Insight Toolkit, Johnson H.J., Harris G., Williams K., The Insight Journal, 2007.

- Source repository on github

For extensions: link to the source code repository and additional documentation

Information for Developers

See details above.