Modules:GrowCutSegmentation-Documentation-3.6

| Line 28: | Line 28: | ||

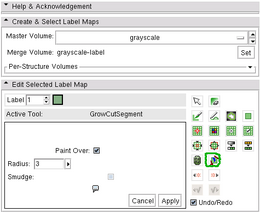

After the segmentation, the user can edit the segmentation by providing additional gestures in the image as illustrated in the figure below. | After the segmentation, the user can edit the segmentation by providing additional gestures in the image as illustrated in the figure below. | ||

{| | {| | ||

| − | |[[Image:additionalEdit.png|thumb| | + | |[[Image:additionalEdit.png|thumb|350px|Additional edits added to the existing segmentation]] |

| − | |[[Image:finalSegmentation.png|thumb| | + | |[[Image:finalSegmentation.png|thumb|350px|Final segmentation]] |

|} | |} | ||

The segmentation resulting from the user's edit are also shown. | The segmentation resulting from the user's edit are also shown. | ||

Revision as of 20:31, 21 October 2010

Module Name

Grow Cut Segmentation

General Information

Module Type & Category

Type: Editor Effect

Category: Segmentation

Authors, Collaborators & Contact

- Author: Harini Veeraraghavan, Jim Miller

- Contact: veerarag at ge.com

Module Description

Grow Cut Segmentation is a competitive region-growing algorithm that uses cellular automata, based on the work of [1]. The algorithm takes as input a set of user-drawn scribbles for the foreground and background. For N-class segmentation, it requires a set of scribbles for each of the N classes plus a scribble for a "don't care" class. The algorithm executes as follows:

- Using the user's scribbles, the algorithm automatically computes a region of interest (ROI) that encompasses them.

- Next, the algorithm iteratively labels the remaining pixels by propagating the labels of the pixels in the scribbled portions of the image.

- The algorithm converges when all the pixels in the ROI are labeled and no pixel can change its label anymore.

- Each pixel is labeled by computing a weighted similarity between the pixel and each of its neighbors, where each weight is the corresponding neighbor's strength. The neighbor with the largest weighted similarity confers its label on the pixel, provided that value exceeds the pixel's own current strength.
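The steps above can be sketched in a few lines of Python. This is a minimal, illustrative sketch, not the module's implementation (which operates on 3D volumes inside Slicer). It assumes a 2D grayscale image stored as nested lists, 4-connected neighbors, a label map where 0 means unlabeled and 1..N are classes, and the similarity g = 1 − |I_p − I_q| / d_max, where d_max is the largest intensity difference in the ROI. The function names `seed_roi` and `grow_cut` and the `margin` and `max_iters` parameters are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]
LabelMap = List[List[int]]  # assumed encoding: 0 = unlabeled, 1..N = classes


def seed_roi(seeds: LabelMap, margin: int = 1) -> Tuple[int, int, int, int]:
    """Return an inclusive bounding box (r0, r1, c0, c1) around all scribbled
    pixels, padded by a hypothetical `margin` and clipped to the image."""
    rows = [r for r, row in enumerate(seeds) for v in row if v != 0]
    cols = [c for row in seeds for c, v in enumerate(row) if v != 0]
    if not rows:
        raise ValueError("at least one scribble is required")
    h, w = len(seeds), len(seeds[0])
    return (max(min(rows) - margin, 0), min(max(rows) + margin, h - 1),
            max(min(cols) - margin, 0), min(max(cols) + margin, w - 1))


def grow_cut(image: Image, seeds: LabelMap, margin: int = 1,
             max_iters: int = 1000) -> LabelMap:
    """Illustrative GrowCut-style cellular automaton on a 2D image (sketch)."""
    h, w = len(image), len(image[0])
    r0, r1, c0, c1 = seed_roi(seeds, margin)
    labels = [row[:] for row in seeds]
    # Scribbled pixels start at full strength; all other pixels start at zero.
    strength = [[1.0 if seeds[r][c] else 0.0 for c in range(w)] for r in range(h)]
    # Largest intensity difference in the ROI, used to scale similarity to [0, 1].
    roi_values = [image[r][c] for r in range(r0, r1 + 1) for c in range(c0, c1 + 1)]
    max_diff = (max(roi_values) - min(roi_values)) or 1.0

    for _ in range(max_iters):
        changed = False
        # Synchronous update: every cell reads the previous iteration's state.
        new_labels = [row[:] for row in labels]
        new_strength = [row[:] for row in strength]
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                best_force, best_label = strength[r][c], 0
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):  # 4-neighbors
                    nr, nc = r + dr, c + dc
                    if not (r0 <= nr <= r1 and c0 <= nc <= c1) or labels[nr][nc] == 0:
                        continue
                    # Similarity to the neighbor, weighted by the neighbor's strength.
                    g = 1.0 - abs(image[r][c] - image[nr][nc]) / max_diff
                    force = g * strength[nr][nc]
                    # A neighbor wins only if it beats the pixel's current strength.
                    if force > best_force:
                        best_force, best_label = force, labels[nr][nc]
                if best_label != 0:
                    new_labels[r][c] = best_label
                    new_strength[r][c] = best_force
                    changed = True
        labels, strength = new_labels, new_strength
        if not changed:  # converged: no pixel changed its label or strength
            break
    return labels
```

For example, on a 3×4 image whose left two columns are 0 and right two columns are 100, with a class-1 scribble at the top-left pixel and a class-2 scribble at the bottom-right pixel, each label spreads freely through its own flat half but cannot cross the edge, because the similarity across it is zero. Scribbled pixels keep strength 1.0, so no neighbor can ever overwrite them. Pixels outside the ROI are never visited and stay unlabeled.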

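After the segmentation, the user can refine it by drawing additional gestures in the image, as illustrated in the figures below.

Figure: Additional edits added to the existing segmentation

Figure: Final segmentation

The first figure shows the additional edits drawn over the existing segmentation; the second shows the segmentation that results from these edits.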

Usage

- Scribble a set of foreground and background gestures.

- Specify the input volume.

- Specify the output volume.

A gesture volume corresponding to the output volume is created automatically and is shown in the Foreground Layer. To use a different gesture volume, change the output volume.

Examples, Use Cases & Tutorials

- Useful for segmenting regions of interest in a volume (image).

Quick Tour of Features and Use

Development

Dependencies

Known bugs

Follow this link to the Slicer3 bug tracker.

Usability issues

Follow this link to the Slicer3 bug tracker. Please select the usability issue category when browsing or contributing.

Source code & documentation

Documentation:

More Information

Acknowledgment

This work is part of the National Alliance for Medical Image Computing (NAMIC), funded by the National Institutes of Health through the NIH Roadmap for Medical Research, Grant U54 EB005149. Information on the National Centers for Biomedical Computing can be obtained from National Centers for Biomedical Computing.