Difference between revisions of "Slicer3:GPURayCaster"

Revision as of 01:45, 3 April 2010


GPU Ray Caster

This project involves moving the GPU Ray Caster from VTKEdge into VTK, then making this volume mapper available from Slicer.

Overview of GPU Ray Casting

VTK supports several volume rendering techniques for both regular, rectilinear grids (vtkImageData) and tetrahedral meshes (represented by vtkUnstructuredGrid). Some of these volume mappers primarily use the CPU (relying on the GPU only for the final display of the resulting image), while others make use of GPU resources such as 2D and 3D texture memory and texture mapping functionality. The new vtkKWEGPURayCastMapper in VTKEdge uses features available on recent GPUs, including fragment programs with conditional and loop operations, multi-texturing, and frame buffer objects, to deliver significantly improved performance over CPU-based ray casting while still maintaining high rendering quality.

The basic ray casting concept is quite simple. For each pixel in the final image a ray is traced from the camera through the pixel and into the scene. As the ray passes through a volume in the scene the scalar data is sampled along the ray and those samples are processed and combined to form a final RGBA result for the pixel. The implementation of the ray casting algorithm within an object-oriented visualization system, such as VTK, that utilizes object-order rendering (polygonal projection) for other data objects in the scene is a bit more complex, requiring the ray casting process to consider the current state of the frame buffer in order to intermix the volume data with other geometric data in the scene. In our GPU implementation of ray casting, the volume data is stored on the GPU in 3D texture memory. Ray casting is initiated by rendering a polygonal representation of the outer surface of the volume. A fragment program is executed at each pixel to traverse the data and determine a resulting value that is combined with the existing pixel value in the frame buffer computed during the opaque geometry phase of rendering.
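The per-pixel work described above (sample the scalar data along the ray, map each sample to color and opacity, combine the samples, then merge with the pixel already in the frame buffer) can be sketched as follows. This is only an illustrative CPU-side sketch in Python, not the VTK fragment program: the function names, the gray-ramp transfer function, and the use of front-to-back compositing with early termination are assumptions made for the example, and opacity correction for the sample distance is left out.

```python
def ramp_transfer_function(scalar):
    """Hypothetical transfer function: gray ramp, opacity equal to the scalar."""
    s = min(max(scalar, 0.0), 1.0)
    return (s, s, s, s)


def composite_ray(samples, transfer_function, background_rgb):
    """Front-to-back compositing of the scalar samples taken along one ray.

    samples           -- scalar values ordered from the camera outwards
    transfer_function -- maps a scalar to an (r, g, b, opacity) tuple
    background_rgb    -- pixel already in the frame buffer (opaque geometry)

    Returns (final_rgb, accumulated_opacity).
    """
    color = [0.0, 0.0, 0.0]
    alpha = 0.0
    for scalar in samples:
        r, g, b, a = transfer_function(scalar)
        weight = (1.0 - alpha) * a  # contribution still visible from the camera
        color[0] += weight * r
        color[1] += weight * g
        color[2] += weight * b
        alpha += weight
        if alpha >= 0.999:  # early ray termination: nothing behind is visible
            break
    # Whatever transparency remains lets the existing pixel show through.
    final_rgb = [c + (1.0 - alpha) * bg for c, bg in zip(color, background_rgb)]
    return final_rgb, alpha


if __name__ == "__main__":
    rgb, opacity = composite_ray([0.1, 0.4, 0.8], ramp_transfer_function,
                                 (0.0, 0.0, 1.0))
    print(rgb, opacity)
```

A fully transparent ray leaves the frame buffer pixel unchanged, and a fully opaque first sample hides everything behind it, which is the behavior needed to intermix the volume with opaque geometry.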

For more detailed information, please see the July 2008 edition of The Source, which can be found here: http://kitware.com/products/archive/kitware_quarterly0708.pdf.

Open Issues

- When the camera is inside the volume, the GPU mapper produces images that differ from those of the CPU mapper:

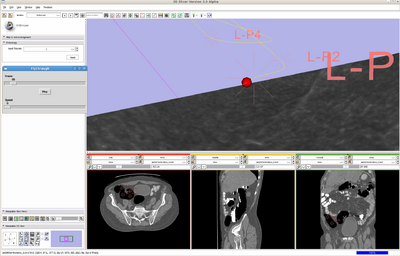

  - Views are clipped. See the image above for an example of clipping; the camera is inside the volume. This does not happen in the VTK CPU mapper.

  - Rasterplanes are oriented differently and are sparser.

    - Partial mail trail:

    - On Tue, Mar 23, 2010 at 6:17 PM, Steve Pieper <pieper@bwh.harvard.edu> wrote: Hi Lisa - Notice how the artifacts are oriented differently: in the CPU version the contours are aligned with view space while in the GPU version they appear to be in image space (and are more visible). Also, the noisy parts of the image (say in the upper right) are smoother in the CPU version while in the GPU version they are sharper. As far as I know the same options were used for both mappers - these images were generated with the latest slicer3 with the VTK cvs head, only picking a different option from the Technique option menu. These issues are noticeable when the voxel size is large relative to the screen pixel size - this situation comes up in particular for the virtual endoscopy applications.... -Steve

    - Hi Steve - You will see a difference in artifacts because of where the rays originate. In the CPU ray casting method, rays start at the camera position, then we figure out how many steps (using the sample distance) to take before we are inside the volume. In the GPU ray cast mapper, we start the rays at the bounding box of the volume. So this will give you a slightly different pattern on your volume. The reason you are seeing these lines is that you are not sampling finely enough. In general you can use the Nyquist rule to sample at 1/2 your voxel spacing - but this only works if your transfer function is just a direct mapping of scalar value to color / opacity (that is, the scalar value with some simple shift / scale yields the opacity and color). But you've probably introduced higher frequencies in your function by mapping some values to a dark color / low opacity, then some other not too distant scalar value to a bright color / high opacity. Having said that - if you are truly using the same sample distance in both mappers (can you verify this?) I don't see why the apparent spacing for the GPU mapper looks bigger than for the CPU mapper. I'll have to consult with Francois. Also - we did attempt to alleviate this issue in the GPU mapper by jittering the starting point of the ray. This should essentially add noise to the artifact (instead of lining up along obviously noticeable contour lines, the effect will be a more subtle stippling). However, we never did get that working correctly. We should probably revisit that at some point. Lisa

- Clipping (ROIs) has some issues that appear to be related to backface culling being turned off

- Integration with the dual view layout has not been done yet
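The mail trail above attributes the different artifact patterns to where the sample positions are anchored: at the camera in the CPU mapper, and at the volume's bounding box in the GPU mapper. The following Python sketch illustrates that difference along a single ray, together with the jittered start that was attempted. It is a simplified model and not the VTK code: the ray is reduced to a distance parameter `t` measured from the camera, and all function and parameter names are hypothetical.

```python
import math
import random


def cpu_style_samples(t_enter, t_exit, step):
    """Sample positions on a grid anchored at the camera (t = 0).

    Only the grid points that fall inside the volume [t_enter, t_exit]
    are kept, so every ray shares the same distances from the camera.
    """
    first = math.ceil(t_enter / step)
    last = math.floor(t_exit / step)
    return [k * step for k in range(first, last + 1)]


def gpu_style_samples(t_enter, t_exit, step, jitter=0.0):
    """Sample positions anchored at the point where the ray enters the volume.

    jitter is an optional start offset in [0, step); with jitter = 0 the
    first sample always sits exactly on the bounding box.
    """
    count = math.floor((t_exit - t_enter - jitter) / step) + 1
    return [t_enter + jitter + k * step for k in range(max(count, 0))]


def jittered_gpu_samples(t_enter, t_exit, step, rng):
    """GPU-style samples with a random start offset chosen per ray."""
    return gpu_style_samples(t_enter, t_exit, step, jitter=rng.random() * step)


if __name__ == "__main__":
    step = 0.5  # sample distance, e.g. half of a voxel spacing of 1.0
    rng = random.Random(0)
    for t_enter in (1.25, 1.4, 1.6):  # neighbouring rays enter at different depths
        print("enter at", t_enter)
        print("  camera-anchored:", cpu_style_samples(t_enter, 3.0, step))
        print("  box-anchored:   ", gpu_style_samples(t_enter, 3.0, step))
        print("  jittered:       ", jittered_gpu_samples(t_enter, 3.0, step, rng))
```

In this model the camera-anchored samples of neighbouring rays lie at the same distances from the camera, so any banding follows view space, while the box-anchored samples follow the shape of the volume boundary. A per-ray random offset breaks up either alignment, trading contour lines for noise.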

Integration into VTK

The GPU Ray Caster was initially funded by the following SBIR projects:

"Visualization of Massive Multivariate AMR Data" National Science Foundation Contract Number OII-0548729

"Rapid, Hardware Accelerated, Large Data Visualization" Department of Defense / Army Research Laboratory Contract Number W911NF-06-C-0179

The output of these projects was a new toolkit under GPL terms called VTKEdge. As part of this project, the GPU Ray Caster will be moved out of the VTKEdge toolkit into the BSD licensed Visualization Toolkit (VTK). In the process, the names of the classes will be changed to follow VTK conventions.

This relocation is currently in progress and is expected to be complete by January 11th. The new classes will be located in the VolumeRendering kit of VTK.

Integration into Slicer

The plan is to review the current Volume Renderer module and work with Yanling Liu <vrnova@gmail.com> and Alex Yarmarkovich <alexy@bwh.harvard.edu> to integrate the new mappers into the current Volume Rendering GUI, so that they can be selected at run-time.

Result March 2010

The GPU Ray Caster has been moved into VTK 5.6. Slicer has shifted its official revision of VTK to 5.6 and therefore now supports the VTK GPU ray caster (vtkGPUVolumeRayCast*).

In the Volume Rendering module, an entry has been added: VTK GPU Ray Casting.