Difference between revisions of "Documentation/Nightly/Extensions/VirtualFractureReconstruction"

Kfritscher76 (talk | contribs) |


| (9 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| + | <noinclude>{{documentation/versioncheck}}</noinclude> | ||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-header}} | {{documentation/{{documentation/version}}/module-header}} | ||

| Line 9: | Line 10: | ||

Acknowledgments: | Acknowledgments: | ||

This work has been funded by the Austrian Science Fund.<br> | This work has been funded by the Austrian Science Fund.<br> | ||

| − | Author: Karl Fritscher, UMIT, BWH, MGH , contact: kfritscher@gmail.com | + | Author: Karl Fritscher, UMIT, BWH, MGH , contact: kfritscher@gmail.com<br> |

| − | Contributors: Peter Karasev, | + | Contributors: Peter Karasev, Steve Pieper, Ron Kikinis |

| Line 19: | Line 20: | ||

{| | {| | ||

The reposition of bone fragments after a fracture, a process also referred to as fracture reduction, is a crucial task during the operative treatment of complex bone fractures. The repositioning of fracture fragments often requires a trial and error approach, which leads to a significant prolongation of the surgery and causes additional trauma to the fragments and the surrounding soft tissue. Wound healing failure, infections, or joint stiffness can be the consequence. Therefore, there is a clear trend towards the development of less invasive techniques to reconstruct complex fractures. In order to support this trend, software tools for calculating and visualizing the optimal way of repositioning fracture fragments based on the usage of segmented CT images as input data have been developed. In several studies, they have successfully demonstrated their potential to decrease operation times and increase reduction accuracy. However, existing software tools are often restricted to particular types of fractures and require a large amount of user interaction.<br> | The reposition of bone fragments after a fracture, a process also referred to as fracture reduction, is a crucial task during the operative treatment of complex bone fractures. The repositioning of fracture fragments often requires a trial and error approach, which leads to a significant prolongation of the surgery and causes additional trauma to the fragments and the surrounding soft tissue. Wound healing failure, infections, or joint stiffness can be the consequence. Therefore, there is a clear trend towards the development of less invasive techniques to reconstruct complex fractures. In order to support this trend, software tools for calculating and visualizing the optimal way of repositioning fracture fragments based on the usage of segmented CT images as input data have been developed. 
In several studies, they have successfully demonstrated their potential to decrease operation times and increase reduction accuracy. However, existing software tools are often restricted to particular types of fractures and require a large amount of user interaction.<br> | ||

| − | Hence, the main objective of the proposed project is to overcome these limitations by developing an algorithmic pipeline that is calculating and visualizing the optimal way of repositioning fracture fragments with a minimal amount of user interaction and without restrictions to particular types of fractures. For | + | Hence, the main objective of the proposed project is to overcome these limitations by developing an algorithmic pipeline that is calculating and visualizing the optimal way of repositioning fracture fragments with a minimal amount of user interaction and without restrictions to particular types of fractures. In a two step approach, the fracture fragments will first be pre-aligned to a healthy reference bone and then aligned against each other. For these steps, texture information coming from CT images, surface properties of the bone fragments as well as prior knowledge about the shape of the healthy (=non-fractured) bone are used in order to identify candidate points for the registration using a nearest neighbor approach based on Mahalanobis distances between fragment surface points.<br> |

The module shall provide the possibility to perform a fully automated fracture reconstruction, but also to perform user guided reconstruction for very complex fractures with a large number of (small) bone fragments. | The module shall provide the possibility to perform a fully automated fracture reconstruction, but also to perform user guided reconstruction for very complex fractures with a large number of (small) bone fragments. | ||

| | | | ||

| Line 29: | Line 30: | ||

Please note that this module is under active development, and is being made available for the purposes of beta testing and feedback evaluation! The functionality, GUI and workflows may change in the subsequent releases of the module. Moreover, parts of the original algorithm are written in CUDA. Due to some limitations for Slicer extensions, this CUDA code can currently not be provided in this extension. The CUDA part of the code is implementing a more flexible registration algorithm (EM-ICP), which can potentially lead to better reconstruction results. If you are interested in using this extended version of the code, please contact me. | Please note that this module is under active development, and is being made available for the purposes of beta testing and feedback evaluation! The functionality, GUI and workflows may change in the subsequent releases of the module. Moreover, parts of the original algorithm are written in CUDA. Due to some limitations for Slicer extensions, this CUDA code can currently not be provided in this extension. The CUDA part of the code is implementing a more flexible registration algorithm (EM-ICP), which can potentially lead to better reconstruction results. If you are interested in using this extended version of the code, please contact me. | ||

| + | |||

| + | At the moment ICP and EM-ICP were used for the actual registration part. However, the module has been designed in a way that the integration of additional algorithms for point set registration can easily be added. So if you are interested in testing and/or providing suitable registration algorithms, please contact me. | ||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

| Line 34: | Line 37: | ||

The extensions provides the possibility to reconstruction/realign fracture fragments and visualize the correct reconstruction of the fracture and switch between reconstructed and not reconstructed fragment position for individual fragments. In combination with a volume rendering a better/more detailed pre-surgical planning process shall be supported. Furthermore, the optimal reconstruction provided by the extension can a also act as a reference for post-operative quality assessment of the "real" reconstruction. | The extensions provides the possibility to reconstruction/realign fracture fragments and visualize the correct reconstruction of the fracture and switch between reconstructed and not reconstructed fragment position for individual fragments. In combination with a volume rendering a better/more detailed pre-surgical planning process shall be supported. Furthermore, the optimal reconstruction provided by the extension can a also act as a reference for post-operative quality assessment of the "real" reconstruction. | ||

| − | |||

| − | |||

| − | |||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

| − | {{documentation/{{documentation/version}}/module-section| | + | {{documentation/{{documentation/version}}/module-section|Tutorial and description of panels }} |

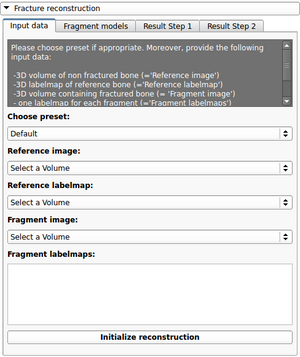

Using the first tab of the loadable module the user should provide the following input data: <br> | Using the first tab of the loadable module the user should provide the following input data: <br> | ||

-3D CT image(volume) of non fractured bone (='Reference image') <br> | -3D CT image(volume) of non fractured bone (='Reference image') <br> | ||

| Line 68: | Line 68: | ||

1) Good registration - Accept registration without performing further registration (fine tuning part will be skipped).<br> | 1) Good registration - Accept registration without performing further registration (fine tuning part will be skipped).<br> | ||

2) Good registration - Accept registration and perform fine tuning<br> | 2) Good registration - Accept registration and perform fine tuning<br> | ||

| − | 3) Registration not good enough - Go back to previous tab and alter parameters. BEFORE going back to the previous tab, the user should select which fragments shall be | + | 3) Registration not good enough - Go back to previous tab and alter parameters. BEFORE going back to the previous tab, the user should select which fragments shall be re- aligned (with different parameters). If no fragments are selected all fragments will be pre-aligned again.<br> |

4) Registration not good enough - Perform manual repositioning using transform sliders and accept registration or continue with fine tuning step.<br> | 4) Registration not good enough - Perform manual repositioning using transform sliders and accept registration or continue with fine tuning step.<br> | ||

<br> | <br> | ||

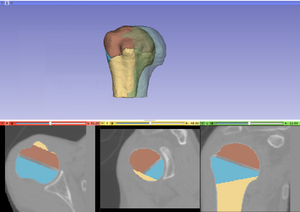

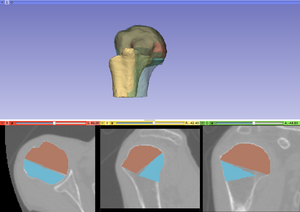

| − | In any case the user can switch between views of aligned and unaligned fragments in the 3D and 2D viewers using the "toggle aligned <--> unaligned" button. | + | In any case the user can switch between views of aligned and unaligned fragments in the 3D and 2D viewers using the "toggle aligned <--> unaligned" button. <br> |

[[File:Loadable_ResultStep1.png|300px|thumb|center|Third tab of the loadable module after first step of reconstruction is finished]] | [[File:Loadable_ResultStep1.png|300px|thumb|center|Third tab of the loadable module after first step of reconstruction is finished]] | ||

| − | [[File:Loadable_ScreenStep1.png|300px|thumb|center|Updated 2D and 3D views after first step of reconstruction | + | [[File:Loadable_ScreenStep1.png|300px|thumb|center|Updated 2D and 3D views after first step of reconstruction. In this case only 2 of the 3 fragments were selected for registration in the second ("Fragment models") tab]] |

| + | |||

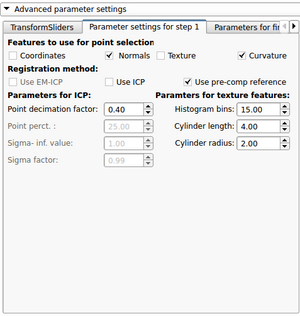

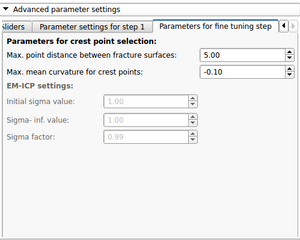

| + | By pressing the "Start fine tuning" button the second part of the reconstruction process in which the fragments will be aligned against each other (without using the reference bone) will be started. The parameters for this step can be changed in the corresponding parameter tab ("Parameters for fine tuning") | ||

| + | [[File:Loadable_Para3.png|300px|thumb|center|Parameter section for first reconstruction step]] | ||

| − | + | After the calculations for the fine tuning step are finished, the last tab will be opened ("Result step 2"). Again 2D and 3D views will be updated accordingly and the same 4 scenarios as in the previous step are possible. | |

| + | [[File:Loadable_ResultStepFinal.png|300px|thumb|center|Final tab of the loadable module after second step of reconstruction process]] | ||

| + | [[File:Loadable Screen-ResultFinal+VR.png|300px|thumb|center|Updated 2D and 3D views after final step of reconstruction. Note that the visible gaps are due to missing bone that has been "simulated" in this (toy) example. This view was generated using a wireframe representatioin of the fragments in combination with a volume rendering of CT dataset of the (healthy) reference bone ]] | ||

| − | + | |} | |

| − | + | ||

| − | |||

| − | |||

| − | |||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-section|Information for Developers}} | {{documentation/{{documentation/version}}/module-section|Information for Developers}} | ||

| − | * Source code of the module: https://github.com/ | + | * Source code of the module: https://github.com/kfritscher/VirtualFractureReconstructionSlicerExtension |

| − | |||

| − | |||

| − | |||

| − | |||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

Latest revision as of 22:28, 20 June 2013


Introduction and Acknowledgements


Extension: Virtual Fracture Reconstruction

Module Description

The repositioning of bone fragments after a fracture, a process also referred to as fracture reduction, is a crucial task during the operative treatment of complex bone fractures. It often requires a trial-and-error approach, which significantly prolongs the surgery and causes additional trauma to the fragments and the surrounding soft tissue. Wound healing failure, infections, or joint stiffness can be the consequence. Therefore, there is a clear trend towards less invasive techniques for reconstructing complex fractures. To support this trend, software tools have been developed that calculate and visualize the optimal way of repositioning fracture fragments from segmented CT images. In several studies, these tools have demonstrated their potential to decrease operation times and increase reduction accuracy. However, existing software tools are often restricted to particular types of fractures and require a large amount of user interaction. Hence, the main objective of this project is to overcome these limitations by developing an algorithmic pipeline that calculates and visualizes the optimal way of repositioning fracture fragments with a minimal amount of user interaction and without restriction to particular types of fractures. In a two-step approach, the fracture fragments are first pre-aligned to a healthy reference bone and then aligned against each other. For these steps, texture information from the CT images, surface properties of the bone fragments, and prior knowledge about the shape of the healthy (non-fractured) bone are used to identify candidate points for the registration, using a nearest-neighbor approach based on Mahalanobis distances between fragment surface points.
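The candidate-point idea described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the extension's code: the feature vectors (for example a CT intensity value and a surface descriptor per point), the function names `covariance`, `solve`, `mahalanobis` and `nearest_candidates`, and the choice to pass the covariance matrix in explicitly are all hypothetical.

```python
import math

def covariance(rows):
    """Sample covariance matrix of equal-length feature vectors."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[sum((r[i] - mean[i]) * (r[j] - mean[j]) for r in rows) / (n - 1)
             for j in range(d)] for i in range(d)]

def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting.

    Raises ZeroDivisionError if the matrix is singular.
    """
    n = len(b)
    m = [list(a[i]) + [b[i]] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                m[r] = [x - f * y for x, y in zip(m[r], m[c])]
    return [m[i][n] / m[i][i] for i in range(n)]

def mahalanobis(u, v, cov):
    """Mahalanobis distance sqrt((u - v)^T cov^-1 (u - v))."""
    diff = [a - b for a, b in zip(u, v)]
    weighted = solve(cov, diff)  # cov^-1 (u - v) without forming the inverse
    return math.sqrt(sum(d * w for d, w in zip(diff, weighted)))

def nearest_candidates(fragment_feats, reference_feats, cov):
    """For each fragment point, index of the closest reference point."""
    return [min(range(len(reference_feats)),
                key=lambda j: mahalanobis(f, reference_feats[j], cov))
            for f in fragment_feats]

# Hypothetical features: the first component varies far more than the second,
# so a difference of 5 in it counts for less than a difference of 2 in the other.
cov = [[100.0, 0.0], [0.0, 1.0]]
print(nearest_candidates([(0.0, 0.0)], [(5.0, 0.0), (0.0, 2.0)], cov))  # → [0]
```

With a plain Euclidean distance the second reference point would win in this example; weighting by the covariance is what lets features with very different scales be compared in one nearest-neighbor search. In practice the covariance would be estimated from the data, for instance with `covariance` over the pooled feature vectors.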
The module shall make it possible to perform a fully automated fracture reconstruction, but also a user-guided reconstruction for very complex fractures with a large number of (small) bone fragments.

Release Notes

The extension consists of two modules: one CLI module and one loadable module. The CLI module provides the algorithmic core functionality for the fragment alignment process, whereas the loadable module manages the automated reconstruction pipeline and integrates user interaction. Please note that this module is under active development and is being made available for beta testing and feedback evaluation! The functionality, GUI and workflows may change in subsequent releases of the module. Moreover, parts of the original algorithm are written in CUDA. Due to some limitations for Slicer extensions, this CUDA code currently cannot be provided in this extension. The CUDA part of the code implements a more flexible registration algorithm (EM-ICP), which can potentially lead to better reconstruction results. If you are interested in using this extended version of the code, please contact me.

At the moment, ICP and EM-ICP are used for the actual registration part. However, the module has been designed so that additional algorithms for point set registration can easily be integrated. So if you are interested in testing and/or providing suitable registration algorithms, please contact me.

Use Cases

The extension makes it possible to reconstruct/realign fracture fragments, visualize the correct reconstruction of the fracture, and switch between the reconstructed and non-reconstructed position for individual fragments. In combination with volume rendering, a better/more detailed pre-surgical planning process shall be supported. Furthermore, the optimal reconstruction provided by the extension can also act as a reference for post-operative quality assessment of the "real" reconstruction.
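For readers unfamiliar with ICP, which the release notes name as the registration method, the following is a minimal sketch of the basic iteration. It is deliberately simplified to 2D points and plain closest-point matching so that it runs with the standard library only; the extension itself works on 3D fragment surfaces, and the function names `rigid_fit_2d`, `transform_2d` and `icp_2d` are hypothetical, not part of the module.

```python
import math

def rigid_fit_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src -> dst."""
    n = len(src)
    cs = (sum(p[0] for p in src) / n, sum(p[1] for p in src) / n)
    cd = (sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n)
    dot = cross = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - cs[0], sy - cs[1]   # centered source point
        bx, by = dx - cd[0], dy - cd[1]   # centered target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated source centroid onto the target centroid.
    t = (cd[0] - (c * cs[0] - s * cs[1]), cd[1] - (s * cs[0] + c * cs[1]))
    return theta, t

def transform_2d(points, theta, t):
    """Rotate points by theta about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]

def icp_2d(moving, fixed, iterations=20):
    """Plain ICP: match each moving point to its closest fixed point, refit, repeat."""
    current = list(moving)
    for _ in range(iterations):
        matches = [min(fixed, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        theta, t = rigid_fit_2d(current, matches)
        current = transform_2d(current, theta, t)
    return current

# A slightly rotated and shifted copy of the fixed points is pulled back onto them.
fixed = [(0.0, 0.0), (4.0, 0.0), (0.0, 2.0), (5.0, 3.0)]
moving = transform_2d(fixed, 0.1, (0.3, -0.2))
aligned = icp_2d(moving, fixed)
```

Any function with the same shape (moving points and fixed points in, aligned points out) could stand in for `icp_2d`, which is one way to picture how further point set registration algorithms might be plugged in. ICP only converges to the correct pose when the starting position is already reasonably close, which is consistent with the pre-alignment step described in the module description.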

Tutorial and description of panels

Using the first tab of the loadable module, the user should provide the following input data:
- 3D CT image (volume) of the non-fractured bone (= 'Reference image')

After providing the input data, the 'Initialize reconstruction' button should be pressed in order to create 3D models of the labelmaps and initialize the reconstruction process. If the user wants to provide a better initial alignment manually, the transform sliders (from within the fracture reconstruction module) can be used. A better initialization will decrease the calculation times for the subsequent alignment process and potentially lead to better results. The "Reset manual transform" button resets the position of each fragment to its state prior to the manual interaction. After the initialization process is finished, the second tab will be opened. The 2D and 3D views will be updated and a surface rendering of the fragments and the reference bone will be provided. The user can change color, opacity and visibility for each individual fragment.

After the first step of the reconstruction is finished, the user can inspect the intermediate result. The 2D and 3D views will be updated according to the calculated transformations. At this point, the following scenarios are possible:
1) Good registration - Accept registration without performing further registration (fine tuning part will be skipped).
2) Good registration - Accept registration and perform fine tuning.
3) Registration not good enough - Go back to the previous tab and alter parameters. BEFORE going back to the previous tab, the user should select which fragments shall be re-aligned (with different parameters). If no fragments are selected, all fragments will be pre-aligned again.
4) Registration not good enough - Perform manual repositioning using the transform sliders and accept the registration or continue with the fine tuning step.

By pressing the "Start fine tuning" button, the second part of the reconstruction process is started, in which the fragments are aligned against each other (without using the reference bone). The parameters for this step can be changed in the corresponding parameter tab ("Parameters for fine tuning"). After the calculations for the fine tuning step are finished, the last tab ("Result step 2") will be opened. Again, the 2D and 3D views will be updated accordingly, and the same 4 scenarios as in the previous step are possible.

Updated 2D and 3D views after final step of reconstruction. Note that the visible gaps are due to missing bone that has been "simulated" in this (toy) example. This view was generated using a wireframe representation of the fragments in combination with a volume rendering of the CT dataset of the (healthy) reference bone.


Information for Developers

- Source code of the module: https://github.com/kfritscher/VirtualFractureReconstructionSlicerExtension